The conspicuous ability of the mammalian brain to integrate and process huge amounts of spatial, visual and temporal stimuli is a result of its enormous structural complexity functioning in an integrated way as a whole. Here we review recent achievements in the understanding of brain structure and function. The traditional view of the brain as a network of neurons has been extended to a more complicated structure that includes overlapping and interacting networks of neurons and glial cells. We discuss artificial versus natural neural networks and consider the concept of attractor networks. Moreover, we speculate that each neuron can have an intracellular network on a genetic level, based and functioning on the principles of artificial intelligence. Hence, we speculate that the mammalian brain is, in fact, a network of networks. We review different aspects of this structure and propose that the study of the brain can be successful only if we utilize concepts recently developed in nonlinear dynamics: integrated information, the emergence of collective dynamics, and the need to account for unexpected behavior and regimes due to nonlinearity. Additionally, we discuss perspectives for medical applications to be developed following this research direction.

Introduction

The human brain is probably the most complex structure in the known universe and for a long time now it has been recognized as a network of communicating compartments functioning in an integrated way as a whole. At a reductionist level, the brain consists of neurons, glial cells, ion channels, chemical pumps and proteins. At a more complex level, neurons are connected via synaptic junctions to compose networks of carefully arranged circuits. Although taking the reductionist approach and considering one cell at a time has led to some of the most important contributions to our understanding of the brain (Kandel et al., 2000), it is yet to be discovered how the brain completes such complex integrated operations that no artificial system has been yet developed to rival humans in recognizing faces, understanding languages or learning from experience (Granger, 2011). Computational neuroscience aims to fill this gap by understanding the brain sufficiently well to be able to simulate its complex functions, in other words, to build it.

As the brain itself appears to be organized as a complex system, understanding its function would be impossible without a systems approach translated from the theory of complex systems. We suggest that this systems approach will only work if it is based on a correct understanding of the brain as an integrated structure that includes an overlap and interaction of multiple networks. In this review we discuss the hypothesis that the brain is a system of interacting neural and glial networks with a neural or genetic network inside each neuron, and that all of these overlapping and interacting networks play an important role in encoding the vast and continuous flow of information that mammals process every second of their conscious living. The review is structured as follows. Firstly, we review a small fragment, important for our purposes, of the historical account of how the brain came to be understood as a structure that can be simulated by artificial networks, together with state-of-the-art research on one class of neural networks, the attractor networks. Next, we discuss the glial network underlying the neural one, the neural-glial interaction, and the growing evidence that glial cells play an irreplaceable part in information encoding. We then introduce a novel hypothesis that a genetic network inside each neuron may also take part in information encoding based on perceptron and learning principles, in effect presenting a kind of intelligence on the intracellular level. Lastly, we conclude with the medical applications of the recent theoretical frameworks and discuss several important concepts that should be followed in further brain research.

Brain as a neural attractor network

Since the earliest times, people have been trying to understand how behaviour is produced. Although some of the oldest hypotheses intended to explain behaviour date back as far as ca. 500 B.C. (e.g. Alcmaeon of Croton and Empedocles of Acragas), the Greek philosopher Aristotle (384-322 B.C.) was the first person to develop a formal theory of behaviour. He suggested that a nonmaterial psyche, or soul, was responsible for human thoughts, perceptions and emotions, as well as desires, pleasure, pain, memory and reason. In Aristotle’s view, the psyche was independent of the body but worked through the heart to produce behaviour, whereas the brain’s main function was simply to cool the blood. This philosophical position, that human behaviour is governed by a person’s mind, is called mentalism, and Aristotle’s mentalistic explanation stayed almost entirely unquestioned until the 1600s, when Descartes (1596-1650) started to consider the idea that the brain might be involved in the control of behaviour. He developed a theory that the soul controls movement through its influence on the pineal body, and also believed that the fluid in the ventricles was put under different pressures by the soul to create movement. Although his hypotheses were later consistently falsified, and gave rise to the well-known mind-body problem (How can a nonmaterial mind produce movements in a material body?), Descartes was one of the first people to suggest that changes in brain dynamics may be accompanied by changes in behaviour.

Since Descartes, the study of behaviour by focusing on the brain has come a long way, from early ideas such as phrenology (i.e., the notion that depressions and bumps on the skull indicate the size of the structure underneath and correlate with personality traits) developed by Gall (1758-1828) to the first attempts to produce behaviour-like processes using artificial networks (McCulloch & Pitts, 1990; Mimms, 2010). Initially, classical engineering and computer approaches to artificial intelligence contributed to simulating essential processes such as speech synthesis and face recognition. Although some of these ideas and efforts have led to the invention of software that now allows people with disabling diseases, like Stephen Hawking, to communicate their ideas and thoughts using speech synthesizers, even the best commercially available speech systems cannot yet convey emotions in their speech through the range of tricks that human brains use essentially effortlessly (Mimms, 2010).

The modern field of neural networks was greatly influenced by the discovery of biological small-scale hardware in the brain. The very basic idea that computational power can be used to simulate brains came from appreciating the vast number, structure and complex connectivity of the specialized nerve cells in the brain, the neurons. There are tens of billions of neurons in the human brain, each possessing a number of dendrites that receive information, a soma that integrates the received impulses and makes a decision in the form of an electrical signal or its absence, and one axon, by which the output is propagated to the next neuron. The inputs and outputs of neurons are electrochemical flows, that is, moving ions, and can be triggered by environmental stimuli such as touch or sound. The changes in electrochemical flow come from a short-lasting event in which the electrical membrane potential of a cell suddenly reverses relative to its resting state, which further results in propagation of the electrical signal. Importantly, there is no intermediate state of this activation: either the electrical threshold is reached, allowing the propagation of the signal, or it is not. This property allows for a straightforward formulation of a computational output in terms of numbers, that is, 1 or 0. Further influence from neurobiology on artificial intelligence came from the idea that information can be stored by modifying the connections between communicating brain cells, resulting in the formation of associations. Hebb was the first to formalize this idea by suggesting that modifications to the connections between brain cells only take place if both neurons are simultaneously active (Hebb, 1949). That is, “Cells that fire together, wire together” (Shatz, 1992).

Neurobiologists have in fact discovered that some neurons in the brain have a modifiable structure (Bliss & Lomo, 1973), stirring neural network research towards developing both the theoretical properties of ideal neural networks and the actual properties of information storage and manipulation in the brain. The first attempt to provide a model of artificial neurons that are linked together was made by McCulloch and Pitts in 1943. They initially developed their binary threshold unit model based on the previously mentioned on-off property of neurons and signal propagation characteristics, suggesting that the activation of the output neuron is determined by summing the activations of the active inputs and checking whether this total activation is enough to exceed the threshold for firing an electrical signal (McCulloch & Pitts, 1990; Mimms, 2010). Later neuronal models based on the McCulloch-Pitts neuron adopted Hebb’s assumption and included the feature that the connections can change their strength according to experience, so that the input needed to reach the threshold for activation can either increase or decrease.
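The binary threshold unit and the Hebbian modification of connections described above can be illustrated with a minimal sketch. It assumes that the unit compares a weighted sum of its inputs with a threshold; the function names, weights, threshold and learning rate are illustrative assumptions rather than values from the cited works.

```python
import numpy as np

def threshold_unit(inputs, weights, threshold):
    """Binary threshold unit: fire (1) if the weighted input sum reaches the threshold."""
    total = float(np.dot(weights, inputs))
    return 1 if total >= threshold else 0

def hebbian_update(weights, inputs, output, rate=0.5):
    """Strengthen only the connections whose input was active while the unit fired."""
    return weights + rate * output * np.asarray(inputs, dtype=float)

# Illustrative values (assumptions, not taken from the cited work).
weights = np.array([1.0, 1.0])
threshold = 2.0

# With unit weights and a threshold of 2 the unit behaves like a logical AND.
and_table = [threshold_unit(np.array(x), weights, threshold)
             for x in [(0, 0), (0, 1), (1, 0), (1, 1)]]

# Co-activation of both inputs with a firing output strengthens both connections.
new_weights = hebbian_update(weights, np.array([1, 1]), output=1)
```

Under these assumptions the unit fires only when both inputs are active, and a single co-activation raises both weights from 1.0 to 1.5, which is one simple way to express "fire together, wire together".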

By creating artificial neurons, researchers gain the advantage of reducing the very complex biological neurons to simpler component parts, which allows us not only to explore how individual neurons represent information but also to examine the highly complex behaviours of neural networks. Furthermore, creating artificial neural networks and presenting them with the problems that a biological neural network might face may provide some understanding of how function arises from neural connectivity in the brain.

Recently, an inspiring computational concept of attractor networks has been developed to contribute to the understanding of how space is encoded in mammalian brains. The concept of attractor networks originates from the mathematics of dynamical systems. When a system consisting of interacting units (e.g. neurons) receives a fixed input, it tends to evolve over time toward a stable state. Such a stable state is called an attractor because small perturbations have only a limited effect on the system, and shortly afterwards the system tends to converge back to the stable coherent state. Therefore, when the term ‘attractor’ is applied to neural circuits, it refers to dynamical states of neural populations that are self-sustained and stable against small perturbations. As opposed to the more commonly used perceptron neuronal network (Rosenblatt, 1962), where information is transferred strictly via feed-forward processing, the attractor network involves associative nodes with feedback connections, meaning that the flow of information is recurrent and thus allows for the modification of the strength of the connections. The architecture of an attractor network is further shown in Fig. 1.

Figure 1. The architecture of attractor network.

Due to the recent discoveries of cells that specialized in encoding and representing space, studying spatial navigation is now widely regarded as a useful model system to study the formation and architecture of cognitive knowledge structures, a function of the brain no machine is yet able to simulate. The hippocampal CA3 network, widely accepted to be involved in episodic memory and spatial navigation, has been suggested to operate as a single attractor network (Rolls, 2007). The anatomical basis for this is that the recurrent collateral connections between the CA3 neurons are very widespread and have a chance of contacting any other CA3 neurons in the network. The theory behind this is that the widespread interconnectivity allows for a mutual excitation of neurons within the network and thus can enable a system to gravitate to the stable state, as suggested by the attractor hypothesis. Moreover, since attractor properties have been suggested to follow from the pattern of recurrent synaptic connections, attractor networks are assumed to learn new information presumably through the Hebb rule. The Hebb rule was suggested by Donald Hebb in 1949 in his famous ‘Organization of Behavior’ book where he claimed that the persistence of activity tends to induce long lasting cellular changes such that ‘When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s efficiency, as one of the cells firing B, is increased’. The hippocampus is in fact highly plastic, as evidenced by the tendency for its synapses to display changes in strength, either downwards (long-term depression) or upwards (long-term potentiation), in response to activity patterns in its afferents (Malenka & Bear, 2004).
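The combination of recurrent connectivity, Hebbian learning and convergence to a stable state can be illustrated with a small Hopfield-style network. This is a generic textbook attractor model used here as an assumption for illustration; it is not the CA3 model of Rolls (2007), and the stored patterns and function names are hypothetical.

```python
import numpy as np

def train_hebbian(patterns):
    """Build a symmetric weight matrix from +/-1 patterns using the Hebb rule."""
    patterns = np.asarray(patterns, dtype=float)
    n_units = patterns.shape[1]
    weights = patterns.T @ patterns / n_units
    np.fill_diagonal(weights, 0.0)  # no self-connections
    return weights

def recall(weights, state, max_sweeps=20):
    """Update units one at a time until the state stops changing."""
    state = np.array(state, dtype=float)
    for _ in range(max_sweeps):
        previous = state.copy()
        for i in range(len(state)):
            state[i] = 1.0 if weights[i] @ state >= 0 else -1.0
        if np.array_equal(state, previous):
            break
    return state

# Two hypothetical activity patterns over eight recurrently connected units.
stored = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
])
weights = train_hebbian(stored)

cue = stored[0].copy()
cue[0] = -cue[0]  # small perturbation: flip one unit
recovered = recall(weights, cue)
```

In this sketch the perturbed cue is pulled back to the first stored pattern, which is the sense in which a stored state resists small perturbations.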

In 1971 John O’Keefe and Jonathan Dostrovsky recorded activity of individual cells in the hippocampus when an animal freely moved around a local environment and found that certain neurons fired at a high rate only when a rat was in a particular location. As the firing of these cells correlated so strongly with the rat’s location in the environment they were named ‘place cells’. May-Britt Moser and Edvard Moser further investigated the inputs of place cells from the cells one synapse upstream in the entorhinal cortex and discovered a type of neurons that were active in multiple places that formed a regular array of the firing field together. The researchers further suggested that these cells served to maintain metric information and convey it to the place cells to allow them to locate their firing fields correctly in the space. Physiological observations of place and grid cells suggest the two kinds of dynamics: discrete and continuous (Jeffery, 2011). Discrete attractor network enables an animal to respond to large changes in the environment but resist small changes, whereas continuous dynamics enables the system to move steadily from one state to the next as the animal moves around the space. Since a discrete system can account for the collective firing of cells at a given moment and a continuous system allows for a progression of activity from one state to another as the animal moves, Jeffery further suggested the two attractor systems must be either localized on the same neurons or be separate but interacting (Jeffery, 2011). The possibility discussed here is that the source of discrete dynamics lies in the place cell network, whereas continuous dynamics originates upstream in the entorhinal grid cells.

In the discrete attractor network, the system moves from one state to another abruptly and seems to do so only when there is large perturbation. Experimental evidence for the existence of discrete networks was originally provided by the phenomenon of remapping. Remapping is the phenomenon that is manifested by a modulation of spatial activity of place cells as a result of an abrupt change in the environment, and when the whole observed place cell population alters their activity simultaneously in response to a highly salient change, it is referred to as ‘complete’ or ‘global’ remapping. Wills et al. manipulated the geometry of the enclosure where the rat was placed by altering its squareness or circularity (Wills et al., 2005). The global remapping only occurred when cumulative changes were sufficiently great and no incremental changes in place cell firing were observed in the intermediate stages, suggesting that the place cell system required significantly salient input to change its state. These findings comprise the evidence for the discrete attractor dynamics in the system, as the system can resist small changes because the perturbation is not large enough for a system to change from one state to another.

Figure 2. (A) Basic scheme of a classifier that gives an output in response to two stimuli. (B) Learning the correct classification between two linear classes of red and green points. Two initially misclassified red points will be classified correctly after learning.

On the other hand, Leutgeb et al. (2005) used a similar procedure and found that a gradual transformation of the enclosure from circular to square and vice versa resulted in gradual transitions of the place cell firing pattern from one shape to another. The existence of these transitional representations does not, however, disprove the existence of attractor networks, but suggests that the place cells can incorporate new information, which is incongruent with previous experience, into a well-learned spatial representation. Furthermore, a different kind of attractor dynamics can represent this property of the place cells: the continuous attractor. A continuous attractor can be conceptualized as an imaginary ball rolling across a smooth surface, rather than a hilly landscape. The ‘attractors’ in this network are now the activity patterns that prevail across the active cells when the animal is at one single place in the environment, and any neuron that is supposed to be a part of this state, in that particular place, will be pulled into it and held by the activity of the other neurons to which it is connected (Jeffery, 2011).

The challenge for the attractor hypotheses comes from the notion of partial remapping. Like global remapping, partial remapping occurs in response to an environmental change, but in contrast to global remapping only some cells change their firing patterns whereas others retain theirs. The difficulty that partial remapping introduces to the attractor model lies in the fact that the defining feature of an attractor network is the coherence of the network function, whereas partial remapping represents a certain degree of disorder. Touretzky and Muller (2006) suggested a solution to this problem by supposing that under certain circumstances attractors can break into subunits, so that some of the neurons belong to one attractor system and others to a second one. However, Hayman et al. (2003) varied the contextual environment by manipulating both colour and odour and found that place cells responded to different combinations of these contexts essentially arbitrarily. The five cells that they recorded in their study clearly responded as individuals, and thus at least five attractor subsystems were needed to explain this behaviour. Jeffery pointed out that once there is a need to propose as many attractors as there are cells, the attractor hypothesis starts to lose its explanatory power (Jeffery, 2011).

Jeffery proposed that one solution is to suppose that attractors are normally created in the place cell system, but that the setup of the experiment, in which neither of the two contextual elements ever occurred together, created a pathological situation that undermined the ability to discover attractor states (Jeffery, 2011). Thus, the fragmented nature of the environment in this experiment could have disrupted the ability of the neurons to act coherently, causing them to act independently and suggesting that partial remapping is a reflection of a broken attractor system. Nevertheless, partial remapping reflects a disruption in the discrete system only, whereas the continuous dynamics seems to be intact: the activity of the cells is still able to move smoothly from one set of neurons to the other, despite the fact that some of the neurons seem to belong to one network and some to another. Therefore, it appears that the continuous and discrete attractor dynamics might originate in different networks.

One potential origin of the continuous attractor dynamics has been suggested to lie in the grid cells of the entorhinal cortex (Jeffery, 2011). Grid cells have certain characteristics that make them strong candidates for the continuous attractor system. Firstly, grid cells are continuously active in any environment and, as far as we know, preserve their specific firing regardless of the location with respect to the world outside. This suggests dynamics in which the activity of these cells is modulated by movement but is simultaneously reinforced by inherent connections in the grid cell network itself. Additionally, evidence suggests that each place cell receives about 1200 synapses from grid cells (de Almeida et al., 2009), and the spatial nature of grid cells makes them ideal candidates to underlie place field formation and to provide continuous attractor dynamics to the subsequent behavioural outcome.

Like place cells, grid cells also have a tendency to remap, although the nature of this remapping is very different. For instance, grid cells do not switch their fields on and off as place cells do. Rather, remapping in grid cells is characterized by rotation and translation of the firing field. Interestingly, experiments have shown that grid cell remapping only occurs when large changes are made to the environment and is accompanied by global place cell remapping, whereas small changes cause no remapping at all in grid cells and only rate remapping (a change in the intensity of firing) in the place cell system (Fyhn et al., 2007). These results highlight some of the problems of the theory that place fields are generated from the activity of grid cells. Firstly, if grid cells are the basis for place cell generation, the coherency of grid cell remapping should be accompanied by homogeneity of place field remapping. Furthermore, the rotation and translation of a grid array should respectively cause an analogous rotation and translation in the global place field population, which clearly does not happen. Besides, the partial remapping problem discussed earlier in this review seems hard to explain by grid cells. If the place cell activity is generated from the grid cells, then there should be evidence for partial remapping in the grid cell population. Nonetheless, there are as yet no published data to support the existence of partial remapping in the grid cell system (Hafting et al., 2005).

Jeffery and Hayman proposed a possible solution to these problems in 2008 (Hayman & Jeffery, 2008). They presented a ‘contextual gating’ model of grid cell/place cell connectivity that may be able to explain how the heterogeneous behaviour of place cells may arise from the coherent activity of grid cells. In this model, the researchers have suggested that in addition to grid cell projections to place cells there is also a set of contextual inputs converging on the same cells. The function of these inputs is to interact with the spatial inputs from the grid cells and decide which inputs should project further onto the place cell. Thus, when the animal is presented with a new set of spatial inputs, a different set of context inputs is active and these gate a different set of spatial inputs, whereas when a small change is made, some spatial inputs will alter, whereas others will remain the same producing partial remapping. The most important feature of this model is thus that it explains how partial remapping may occur in a place cell population while no change is seen in the grid cell activity. This model thus allows for a contextual tuning of individual cells. Hayman and Jeffery modelled this proposal by simulating networks of grid cells which project to place cells and then altering the connection to each place cell in a context-dependent manner and found that it was in fact possible to slowly shift activity from one place field to another as the context was gradually altered.
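The gating idea can be made concrete with a toy one-dimensional sketch. It is not the simulation of Hayman and Jeffery (2008): the cosine-shaped grid inputs, the binary gates, the threshold and all numerical values are assumptions chosen only to show how place cell firing can change with context while the grid inputs stay the same.

```python
import numpy as np

positions = np.linspace(0.0, 1.0, 101)

def grid_activity(positions, spacings, phases):
    """Periodic 1D 'grid' firing: one row per grid input, identical in every context."""
    x = positions[None, :]
    return 0.5 * (1.0 + np.cos(2 * np.pi * (x - phases[:, None]) / spacings[:, None]))

def place_activity(grid, gates, threshold):
    """Each place cell sums only the grid inputs that its context gates let through."""
    drive = gates.astype(float) @ grid          # shape: (cells, positions)
    return np.maximum(drive - threshold, 0.0)   # thresholded firing rate

# Hypothetical grid inputs with different spacings and phases.
spacings = np.array([0.3, 0.4, 0.5, 0.7])
phases = np.array([0.0, 0.1, 0.2, 0.3])
grid = grid_activity(positions, spacings, phases)

# Hypothetical gates: rows are place cells, columns are grid inputs.
gates_context_a = np.array([[1, 1, 0, 0],
                            [0, 0, 1, 1]], dtype=bool)
# Small context change: only the gating of cell 0 is altered.
gates_context_b = np.array([[1, 0, 1, 0],
                            [0, 0, 1, 1]], dtype=bool)

rates_a = place_activity(grid, gates_context_a, threshold=1.5)
rates_b = place_activity(grid, gates_context_b, threshold=1.5)

cell0_remapped = not np.allclose(rates_a[0], rates_b[0])
cell1_unchanged = np.allclose(rates_a[1], rates_b[1])
```

Here the same `grid` array drives both contexts, yet one place cell changes its firing profile and the other does not, which is a minimal analogue of partial remapping without any change in grid cell activity.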

To conclude, it appears that attractor dynamics is present throughout at least two systems responsible for some aspects of encoding space: the place cell and the grid cell systems. The ‘contextual gating’ model explains how the partial remapping occurs in the place cell system without a coinciding remapping in the grid cell system. Thus, it supports the hypothesis that two different attractor dynamics are present in the place and grid cell populations. The grid system preserves the continuous dynamics by maintaining their relative firing location, and although it directly influences the place cell activity, it is proposed to be further modulated by contextual inputs, which results in discrete attractor dynamics in the place cell system.

Here we have focused on reviewing the level of description of attractor networks, which may underlie possible technical abilities of computer simulations. Nevertheless, it is necessary to conduct further research where such networks can be trained on specific examples to enable them to implement memories of specific action patterns that an animal in an experimental room would use.

Glial network and glial-neural interactions

Similar to neurons, glial cells also organize networks and generate calcium oscillations and waves. Neural and glial networks overlap and interact in both directions. The mechanisms and functional role of calcium signaling in astrocytes are not yet well understood. In particular, it is hypothesized that their correlated or synchronized activity may coordinate neuronal firing at different spatial sites through the release of neuroactive chemicals.

Brain astrocytes were traditionally considered as cells supporting neuronal activity. However, recent experimental findings have demonstrated that astrocytes may also actively participate in information transmission processes in the nervous system. In contrast with neurons, these cells are not electrically excitable but can generate chemical signals regulating synaptic transmission in neighboring synapses. Such regulation is associated with calcium pulses (or calcium spikes) inducing the release of neuroactive chemicals. The idea of astrocytes being important in addition to the pre- and post-synaptic components of the synapse has led to the concept of a tripartite synapse (Araque et al., 1999; Mazzanti et al., 2001). A part of the neurotransmitter released from the presynaptic terminals (i.e., glutamate) can diffuse out of the synaptic cleft and bind to metabotropic glutamate receptors (mGluRs) on the astrocytic processes that are located near the neuronal synaptic compartments. The neurotransmitter activates G-protein mediated signaling cascades that result in phospholipase C (PLC) activation and inositol-1,4,5-trisphosphate (IP3) production. The IP3 binds to IP3-receptors in the intracellular stores and triggers Ca2+ release into the cytoplasm. Such an increase in intracellular Ca2+ can trigger the release of gliotransmitters (Parpura & Zorec, 2010) [e.g., glutamate, adenosine triphosphate (ATP), D-serine, and GABA] into the extracellular space.

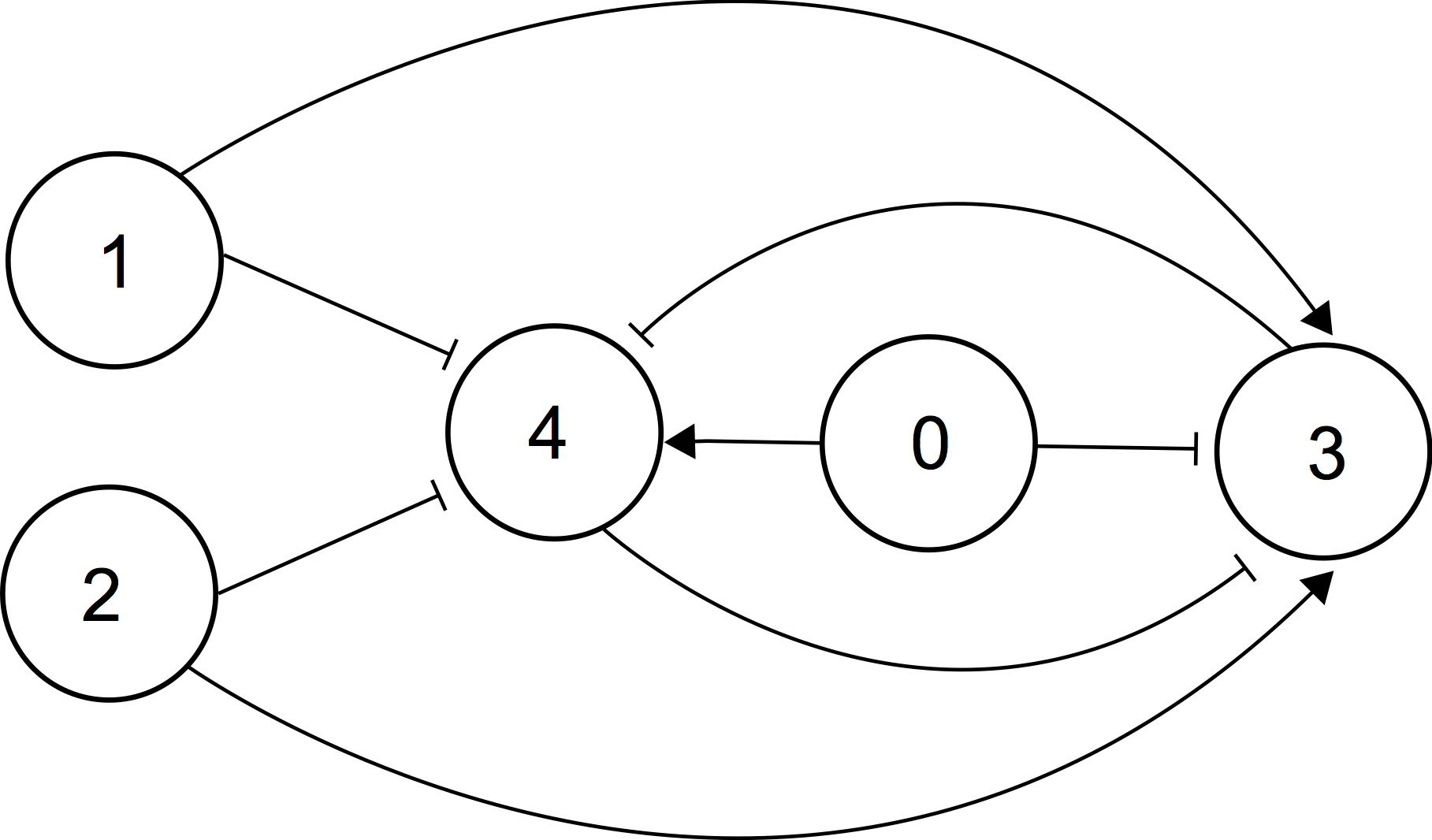

Figure 3. Scheme of an intracellular gene regulatory network able to perform linear classification (Bates et al., 2015). Each node represents a gene linked to the circuit by activatory or inhibitory connections. A classifier is based on the toggle switch organized by genes 3 and 4. Two inputs are genes 1 and 2. Gene 0 has a permanent basal expression, providing that gene 4 is initially in the state ON. Mutual action of genes 1 and 2 will either switch this state to OFF or not, providing the scheme can classify two inputs according to the binary classification. The expression of gene 0 sets the “threshold” of classification. If this threshold is “learned”, the whole genetic network will function as a perceptron.

A gliotransmitter can affect both the pre- and post-synaptic parts of the neuron. By binding to presynaptic receptors it can either potentiate or depress presynaptic release probability. In addition to presynaptic feedback signaling through the activation of astrocytes, there is feedforward signaling that targets the postsynaptic neuron. Astrocytic glutamate induces slow inward postsynaptic currents (SICs) (Parpura & Haydon, 2000; Parri et al., 2001; Fellin et al., 2006). Thus, astrocytes may play a significant role in the regulation of neuronal network signaling by forming local elevations of gliotransmitters that can guide the excitation flow (Semyanov, 2008; Giaume et al., 2010). By integrating neuronal synaptic signals, astrocytes provide a coordinated release of gliotransmitters affecting local groups of synapses from different neurons. This action may control the level of coherence in synaptic transmission in neuronal groups (for example, by means of the above-mentioned SICs). Moreover, astrocytes organize networks by means of gap junctions that provide intercellular diffusion of active chemicals (Bennett et al., 2003) and may be able to propagate such effects even further. In this extended case, the intercellular propagation of calcium signals (calcium waves) has also attracted great interest (Cornell-Bell et al., 1990) in the study of many physiological phenomena, including calcium-induced cell death and epileptic seizures (Nadkarni & Jung, 2003b). In some cases calcium waves can also be mediated by the extracellular diffusion of ATP between cells (Bennett et al., 2005). Gap junctions between astrocytes are formed by specific proteins (connexin Cx43) which are selectively permeable to IP3 (Bennett et al., 2003). Thus, theoretically, astrocytes may contribute to the regulation of neuronal activity between distant network sites.

Several mathematical models have been proposed to understand the functional role of astrocytes in neuronal dynamics: a model of the “dressed neuron,” which describes the astrocyte-mediated changes in neural excitability (Nadkarni & Jung, 2003a, 2007), a model of the astrocyte serving as a frequency selective “gatekeeper” (Volman et al., 2007), and a model of the astrocyte regulating presynaptic functions (De Pitta et al., 2011). It has been demonstrated that gliotransmitters can effectively control presynaptic facilitation and depression. In the following paper (Gordleeva et al., 2012) the researchers considered the basic physiological functions of tripartite synapses and investigated astrocytic regulation at the level of neural network activity. They illustrated how activations of local astrocytes may effectively control a network through combination of different actions of gliotransmitters (presynaptic depression and postsynaptic enhancement). A bi-directional frequency dependent modulation of spike transmission frequency in a network neuron has been found. A network function of the neuron implied a correlation between the neuron input and output reflecting the feedback formed by synaptic transmission pathways. The model of the tripartite synapse has recently been employed to demonstrate the functions of astrocytes in the coordination of neuronal network signaling, in particular, spike-timing-dependent plasticity and learning (Postnov et al., 2007; Amiri et al., 2011; Wade et al., 2011). In models of astrocytic networks, communication between astrocytes has been described as Ca2+ wave propagation and synchronization of Ca2+ waves (Ullah et al., 2006; Kazantsev, 2009). However, due to a variety of potential actions, that may be specific for brain regions and neuronal subtypes, the functional roles of astrocytes in network dynamics are still a subject of debate.

Figure 4. Scheme of an intracellular gene regulatory network able to perform associative learning (Bates et al., 2014): the nodes represent proteins, black lines represent activator (pointed arrow) and inhibitory (flat-ended arrow) transcriptional interactions. Square boxes stand for transcriptional factors whose production is not regulated within this circuit but is important to the regulation of the circular nodes.

Moreover, a single astrocyte represents a spatially distributed system of processes and a cell soma. Many experiments have shown that calcium transients that appear spontaneously in cell processes propagate only over very short distances, comparable with the spatial size of the event itself (Grosche et al., 1999). This is not wave propagation in its classical sense. In other words, calcium events in astrocytes appear spatially localized. However, global events that synchronously involve most of the astrocyte compartments occur from time to time. Interestingly, the statistics of calcium events satisfy a power law, indicating that the system dynamics develops in the mode of self-organized criticality (Beggs & Plenz, 2003; Wu et al., 2014). Still, the origin and functional role of such spatially distributed calcium self-oscillations have not yet been clearly understood in terms of dynamical models.

Intracellular genetic intelligence

Neural networks in brains demonstrate intelligence, the principles of which have been mathematically formulated in the study of artificial intelligence, starting from basic summating and associative perceptrons. In this sense, the construction of networks with artificial intelligence has mimicked the function of neural networks. The principles of brain functioning are not yet fully understood, but there is little doubt that perceptron intelligence based on the plasticity of intercellular connections is implemented, in one way or another, in the structure of the mammalian brain. But could this intelligence be implemented on a genetic level inside one neuron functioning as an intracellular perceptron? Are the principles of artificial intelligence used by nature on a new, much smaller scale inside the brain cells?

As a proof of principle one can refer to early works showing that a neural network can be built from chemical reactions at the molecular level (Hjelmfelt et al., 1991). Both simple networks and Turing machines can be implemented on this scale. It is noteworthy that the properties of intelligence, such as the ability to learn, cannot be considered separately from the evolutionary learning provided by genetic evolution. Following this paradigm, Bray demonstrated that a cellular receptor can be considered as a perceptron with weights learned via genetic evolution (Bray & Lay, 1994). Later Bray also discussed the use of proteins as computational elements in living cells (Bray, 1995). So the question arises: could neural network perception and computations be organized at the genetic level? Qian et al. have shown experimentally that this is possible: for example, a Hopfield-type memory can be implemented with a DNA gate architecture based on DNA strand displacement cascades (Qian et al., 2011).

Let us review how intelligence can be implemented on the level of the genetic network inside each neuron. The most basic scheme of learning developed by Frank Rosenblatt refers to classification of stimuli (Fig. 2A), and it is known as a “perceptron” (Rosenblatt, 1958). In its simplest version a perceptron classifies points in a plane (space) according to whether they are above or below a line (hyperplane) (Fig. 2B), where each axis of the plane (space) represents a stimulus.

The classifier is given by the following function:

\[ f(\overrightarrow{x}) = \begin{cases} 1, & \text{if } \overrightarrow{w}\cdot\overrightarrow{x} + b > 0, \\ 0, & \text{otherwise.} \end{cases} \]

Here \(\overrightarrow{x}\) is a vector of inputs, \(\overrightarrow{w}\) stands for the vector of weights, which can be “learned”, and \(b\) is a constant offset (bias) that sets the threshold.

Hence, the perceptron decides whether the weighted sum of the strengths of the stimuli is above or below a certain threshold. Starting with a vector of random weights and a training set of input vectors with their desired outputs, the weight vector is adapted iteratively until the number of misclassifications falls below a user-specified threshold or a certain number of iterations has been completed (Fig. 2B).
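
The iterative adaptation described above can be sketched with the classical perceptron learning rule. This is a minimal illustration on synthetic, linearly separable two-dimensional data. The separating line, learning rate, margin and all function names are assumptions chosen for the example and are not part of the cited genetic designs.

```python
import random


def predict(weights, bias, x):
    """Perceptron output: 1 if w.x + b > 0, otherwise 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, labels, rate=0.1, max_epochs=5000, max_errors=0, seed=1):
    """Adapt random initial weights until an epoch has at most max_errors mistakes."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in samples[0]]
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(max_epochs):
        errors = 0
        for x, target in zip(samples, labels):
            delta = target - predict(weights, bias, x)  # -1, 0 or +1
            if delta != 0:
                errors += 1
                weights = [w + rate * delta * xi for w, xi in zip(weights, x)]
                bias += rate * delta
        if errors <= max_errors:
            break
    return weights, bias


# Synthetic stimuli: points above the (assumed) line x2 = 0.5*x1 + 0.2 are class 1.
rng = random.Random(0)
points, targets = [], []
while len(points) < 100:
    x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
    gap = x2 - (0.5 * x1 + 0.2)
    if abs(gap) < 0.1:  # keep a margin so the classes are cleanly separable
        continue
    points.append((x1, x2))
    targets.append(1 if gap > 0 else 0)

w, b = train_perceptron(points, targets)
n_wrong = sum(predict(w, b, x) != t for x, t in zip(points, targets))
print("misclassified after training:", n_wrong)
```

Because the data are separable with a finite margin, the rule is guaranteed to stop after a finite number of updates. For non-separable data the `max_errors` and `max_epochs` arguments play the role of the stopping criteria mentioned in the text.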

In (Bates et al., 2015) we suggested a design of a genetic network able to make intelligent decisions by classifying several external stimuli. Here the intelligent decision takes the form of a linear classification task (see Fig. 3). The model is based on the design of Kaneko et al. (Suzuki et al., 2011) for an arbitrarily connected n-node genetic circuit. In this design the weights by which the linear separation is performed are manifested in the strength of transcriptional regulation between certain nodes, as seen in (Jones et al., 2007).

Another basic form of intelligent learning is represented by an associative perceptron. Association between two stimuli is best illustrated by the famous Pavlovian experiments, where a dog learns to associate the ringing of a bell with food. After simultaneous application of both stimuli, the dog learns to associate them, exhibiting the same response to either of the two stimuli alone.

One may further anticipate that associative learning is possible within a single cell, as suggested by experiments with amoebae anticipating periodic events (Saigusa et al., 2008). As a proof of principle we refer to the pioneering work of Gandhi et al. (Gandhi et al., 2007), who formally showed that associative learning can occur on the intracellular scale, at the level of chemical interactions between molecules. Later Jones et al. showed that the real genomic interconnections of the bacterium Escherichia coli can function as a liquid-state machine that learns associatively how to respond to a wide range of environmental inputs (Jones et al., 2007). Most interestingly, in (Fernando et al., 2009) a scheme of a single-cell genetic circuit was suggested that can learn an association between two stimuli within the lifetime of the cell.

To illustrate this, let us consider the model of the associative genetic perceptron suggested in (Fernando et al., 2009), which is able to learn associatively in the manner of Pavlov’s dogs. Pavlovian conditioning is the process in which a response typically associated with one stimulus becomes associated with a second, independent stimulus through repeated, simultaneous presentation of the two stimuli. After a sufficient number of learning events (simultaneous presentations of the two stimuli), the presentation of the second stimulus should prompt a response by itself. A scheme of the intracellular gene regulatory circuit demonstrating this ability is shown in Fig. 4. This network is completely symmetric except for one feature: in the left part of the scheme, with proteins u1, r1, w1 responsible for the main stimulus (the “meat”), the basal expression of w1 is always present, whereas in the right part of the scheme, with proteins u2, r2, w2 responsible for the “bell” stimulus, there is initially no basal expression of w2 and the concentration of this protein is zero. The flat-headed arrow connecting the ui and ri molecules does not represent gene inhibition, although the effect is similar. It represents the fact that ui binds to an ri molecule, thus preventing the ri molecule from inhibiting the gene wi.

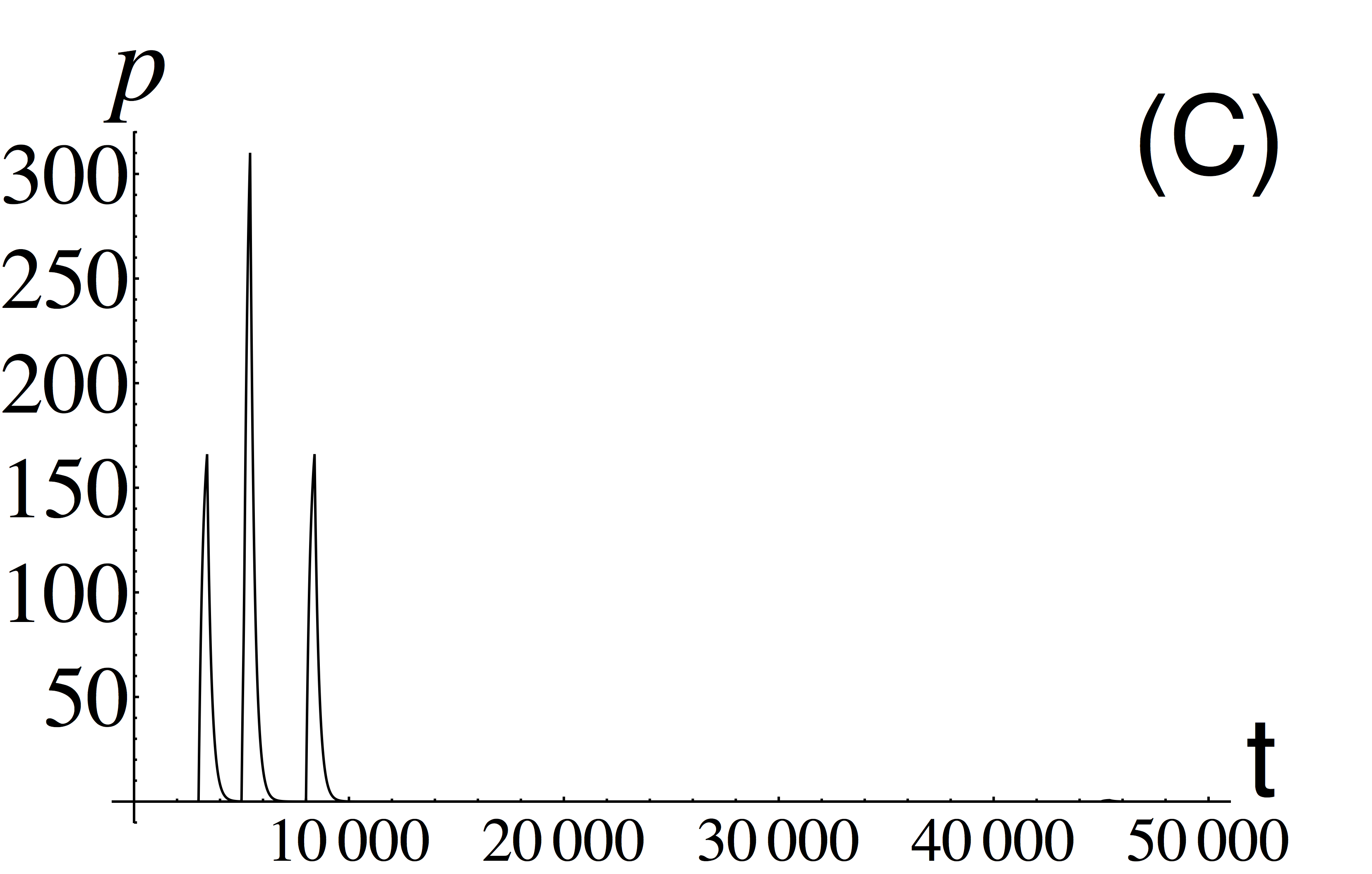

Without noise we can understand the dynamics more easily by simulating these genetic networks and analysing how the system responds to “pulses” of the inputs u1,2 at various points in time [see Figs. 5(a-c)]. This collection of images summarizes the dynamics of associative learning. Initially, at t ≈ 1000, a pulse of u2 elicits no response from the system; this is the conditioned stimulus. At t ≈ 3000 a pulse of u1, the unconditioned stimulus, stimulates a response from the system. At t ≈ 5000 we observe synchronized pulses of both u1 and u2; at this point the association is “learned,” as evidenced by the sudden increase in w2. At t ≈ 8000 we see a single pulse of u2 eliciting a response in p on its own. Hence, the system has now “learnt” and has fundamentally changed its functionality.
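
The pulse protocol described above can be reproduced with a deliberately simplified toy model. The sketch below is not the gene regulatory circuit of Fig. 4 or the equations of (Fernando et al., 2009). It is a hypothetical two-variable Hebbian-type model in which the weight w2 grows only when the input u2 coincides with an active response p. All rate constants, the pulse width and the function names are assumptions made for illustration. Only the pulse times follow the text.

```python
def pulse(t, start, width=200.0):
    """Square input pulse of unit height starting at `start` (width is assumed)."""
    return 1.0 if start <= t < start + width else 0.0


def simulate(t_end=10000, dt=1.0, w1=1.0, k_learn=0.002, d_p=0.05, d_w=1e-4):
    """Euler integration of a toy associative model (hypothetical, not Fig. 4)."""
    p, w2 = 0.0, 0.0  # response and learned weight both start at zero
    history = []
    for step in range(int(t_end / dt)):
        t = step * dt
        u1 = pulse(t, 3000) + pulse(t, 5000)                   # "meat"
        u2 = pulse(t, 1000) + pulse(t, 5000) + pulse(t, 8000)  # "bell"
        history.append((t, u1, u2, w2, p))
        dp = w1 * u1 + w2 * u2 - d_p * p                    # response driven by weighted inputs
        dw2 = k_learn * u2 * p * (1.0 - w2) - d_w * w2      # coincidence-driven learning
        p += dt * dp
        w2 += dt * dw2
    return history


def peak_response(history, start, stop):
    """Largest response p in the time window [start, stop)."""
    return max(p for t, _, _, _, p in history if start <= t < stop)


history = simulate()
before = peak_response(history, 1000, 1300)  # bell alone, before pairing
after = peak_response(history, 8000, 8300)   # bell alone, after pairing
print(f"response to u2 before pairing: {before:.2f}, after pairing: {after:.2f}")
```

In this toy model the first pulse of u2 produces no response because w2 is zero, and the pulse of u1 alone does not change w2. Only the paired pulses raise w2, after which u2 alone elicits a response, mirroring the sequence of events in the text.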

In summary, we have discussed several designs of genetic networks illustrating that intelligence, as it is understood in the science of artificial intelligence, can be built inside the cell, on the gene-regulatory scale. It is very likely that neurons and astrocytes, being very sophisticated cells, use this functionality in one form or another. It is an intriguing question how learning and changes of weights are implemented in the real genome of the neuron. We put forward the hypothesis that weights are implemented in the form of a DNA methylation pattern, as a kind of long-term memory.

Medical applications

Neurodegenerative diseases are chronic diseases that are specific only to members of Homo sapiens; such diseases cause a progressive loss of the function, structure and number of neural cells, which results in brain atrophy and cognitive impairment. The development of neurodegenerative diseases may vary greatly depending on their causes, be it an injury (arising from physical, chemical or infectious exposure), genetic background, a metabolic disorder, a combination of these factors, or an unknown cause. The situation with the multiple cellular and molecular mechanisms of neurodegeneration appears to be similar; their complexity often makes a single key mechanism impossible to identify. At the cellular level, neurodegenerative processes often involve anomalies in protein processing, which trigger the accumulation of atypical proteins (both extra- and intracellularly), such as amyloid beta, tau and alpha-synuclein (Jellinger, 2008). Neurodegenerative processes bring about a loss of homeostasis in the brain, which leads to the deterioration of structural and functional connectivity in neuronal networks, further aggravated by degradation in signal processing. Neurodegeneration begins with attenuation and imbalance of synaptic transmission, which influences the flow of information through the neural network. Functional disorders build up as neurodegeneration progresses, causing synaptic contacts to collapse, connections between cells to change and neuronal subpopulations to be lost. These structural and functional changes reflect general brain atrophy accompanied by cognitive deficiency (Terry, 2000; Selkoe, 2001; Knight & Verkhratsky, 2010; Palop & Mucke, 2010).

Figure 5. Learning the association of two stimuli by intracellular genetic perceptron. Plots of concentrations of inputs one (A) and two (B) and output (C) versus time. A system always reacts to an input one, but it will react to input two only after learning the association of two stimuli.

A traditional view of neurodegenerative diseases considers neurons as the main element responsible for their progression. However, since the brain is a highly organized structure based on interconnected networks of neurons and glia, there is increasing evidence that glial cells, in particular astrocytes as the main type of glial cell, participate not only in important signaling functions of the brain but also in its pathogenesis. Astrocytes, like neurons, form a network, called a syncytium, connected by intercellular gap junctions. Communication between cells is predominantly local (with the nearest neighbors), and the cells themselves, according to experimental research, occupy specific areas that barely overlap. This spatial arrangement and the unique morphology of astrocytes allow them not only to receive signals but also to have a significant impact on the activity of neural networks, both in physiological conditions and in the pathogenesis of the brain (Terry, 2000; Selkoe, 2001; Jellinger, 2008; Knight & Verkhratsky, 2010). Disturbance of this functional activity of astrocytes and of their interaction with neuronal cells, as shown by recent studies, forms the basis of a large spectrum of brain disorders (ischemia, epilepsy, schizophrenia, Alzheimer’s disease, Parkinson’s disease, Alexander’s disease, etc.) (Verkhratsky et al., 2015). Pathological changes in astroglia occurring in neurodegeneration include astroglial atrophy, with its morphological and functional changes, and astrogliosis (Phatnani & Maniatis, 2015). These two mechanisms of pathological reaction are expressed differently at different stages of the neurodegeneration process; astroglia often lose their functions at the early stages of the disease, whereas specific damage (such as senile plaques) and neuronal death cause astrogliosis to develop at the advanced stages.

Thus, when developing new treatments and correcting pathological conditions and neurodegenerative disorders of the brain, the role of glial networks interacting closely with neural networks should be taken into account, as the brain of higher vertebrates is an elaborate internetwork structure. Such an approach should allow treatments for neurodegenerative diseases to be developed more accurately, and will thereby contribute to the development of medicine in general in the near future.

Discussion

A traditional view of brain structure considers neurons as the main element responsible for its functionality. This network operates as a whole in an integrated way; thus the mammalian brain is an integrated neural network. However, as we have discussed in this review, this network cannot be considered separately from the underlying and overlapping network of glial cells, or from the molecular network inside each neuron. Hence, the mammalian brain appears to be a network of networks (Fig. 6) and should be investigated within an integrated approach that considers the interaction of all these interconnected networks.

The function and structure of the complicated mammalian brain network can be understood only if investigated using the principles recently discovered in the theory of complex systems. This means following several concepts: i) using an integrated approach and taking integrated measures to characterize a system, e.g., integrated information; ii) investigating collective dynamics and properties emerging from the interaction of different elements; iii) considering complex and counterintuitive dynamical regimes in which a system demonstrates unexpected behaviour, e.g., noise-induced ordering or delayed bifurcations.

Concept of integrated information. First of all, one should use an integrated approach to show that the complicated structure of the brain was motivated by the necessity of providing evolutionary advantages. We favour the hypothesis that the additional spatial encoding of information provided by the astrocyte network and by intelligent networks inside each neuron maximizes the amount of integrated information generated by the interaction of neural and glial networks. From this point of view, the development of neuro-glial interaction has increased fitness by improving information processing and, hence, provided an evolutionary advantage for higher mammals. Indeed, the role of astrocytes in the processing of signals in the brain is not completely understood. We know that astrocytes add some kind of spatial synchronization to the time series generated by neurons. This spatial encoding of information processing occurs due to calcium events whose complex form is determined by the astrocyte morphology. On the one hand, the time scale of these events is long, because calcium events develop much more slowly than the transmission of information between neurons. On the other hand, once a calcium event has occurred, it is able to involve the affected neurons almost instantaneously, because it interacts simultaneously with all the neurons linked to this particular astrocyte. Additionally, we know that the distribution of calcium events follows a power law, with a certain exponent or range of exponents, as observed in experiments.

Figure 6. Mammalian brain as a network of networks. The neural layer, shown schematically on the top, interacts bi-directionally with a layer of astrocytes (green), which are coupled with a kind of diffusive connectivity. In turn, each neuron (brown) from the neural network may contain a network at the molecular level that operates according to the same principles as the neural network itself.

We assume that such systems of two interacting networks appeared evolutionarily because there was a need to maximize the integrated information generated. Importantly, a theory of integrated information has been developed to formalize the measure of consciousness that is a property of higher mammals.

Despite long-standing interest in the nature of self-consciousness (Sturm & Wunderlich, 2010) and the information processing behind it, until recently no formal measure had been introduced to quantify the level of consciousness. Giulio Tononi, a psychiatrist and neuroscientist from the University of Wisconsin-Madison, formulated the Integrated Information theory, a framework intended to formalize and measure the level of consciousness. In his pioneering paper (Tononi et al., 1998) Tononi considered the brains of higher mammals as an extraordinary integrative device and introduced the notion of Integrated Information. Later this concept was mathematically formalised in (Tononi, 2005, 2008; Balduzzi & Tononi, 2009; Tononi, 2012) and expanded to discrete systems (Balduzzi & Tononi, 2008). Other authors have suggested newly developed measures of integrated information applicable to practical measurements from time series (Barrett & Seth, 2011).

The theory of integrated information is a principled theoretical approach. It claims that consciousness corresponds to a system’s capacity to integrate information, and proposes a way to measure such capacity (Tononi, 2005). The integrated information theory can account for several neurobiological observations concerning consciousness, including: (i) the association of consciousness with certain neural systems rather than with others; (ii) the fact that neural processes underlying consciousness can influence or be influenced by neural processes that remain unconscious; (iii) the reduction of consciousness during dreamless sleep and generalized seizures; and (iv) the time requirements on neural interactions that support consciousness (Tononi, 2005). The measure of integrated information captures the information generated by the interactions among the elements of the system, beyond the sum of the information generated independently by its parts. This requires two conditions to hold simultaneously: i) there is a large ensemble of different states, corresponding to different conscious experiences; ii) each state is integrated, i.e., it appears as a whole and cannot be decomposed into independent subunits. Hence, to understand the functioning of distributed networks representing the brain of higher mammals, it is important to characterize their ability to integrate information. To our knowledge, nobody has applied the measures of integrated information to neural networks with both temporal and spatial encoding of information and, hence, nobody has utilized this approach to illustrate the role of astrocytes in the processing of information by neural-astrocyte networks.
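
The idea of information generated by the whole beyond the sum of its parts can be made concrete with a much simpler quantity than the full measure of integrated information. The sketch below computes the multi-information (also called total correlation or integration), i.e., the sum of the entropies of the individual units minus the entropy of the joint state. It is only a coarse proxy, not the Φ of the cited works, and the two example distributions and all function names are assumptions made for illustration.

```python
import math
from collections import defaultdict


def entropy(dist):
    """Shannon entropy in bits of a distribution given as {state: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def marginal(joint, index):
    """Marginal distribution of the unit at position `index` of each joint state."""
    m = defaultdict(float)
    for state, p in joint.items():
        m[state[index]] += p
    return m


def integration(joint):
    """Multi-information: sum of single-unit entropies minus the joint entropy."""
    n_units = len(next(iter(joint)))
    parts = sum(entropy(marginal(joint, i)) for i in range(n_units))
    return parts - entropy(joint)


# Two binary units: statistically independent versus perfectly coupled.
independent = {(a, b): 0.25 for a in (0, 1) for b in (0, 1)}
coupled = {(0, 0): 0.5, (1, 1): 0.5}

print(f"independent units: {integration(independent):.2f} bits")
print(f"coupled units:     {integration(coupled):.2f} bits")
```

Independent units give zero, whereas coupled units give a positive value. Measures of integrated information refine this idea by, among other things, searching over partitions of the system and taking its dynamics into account.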

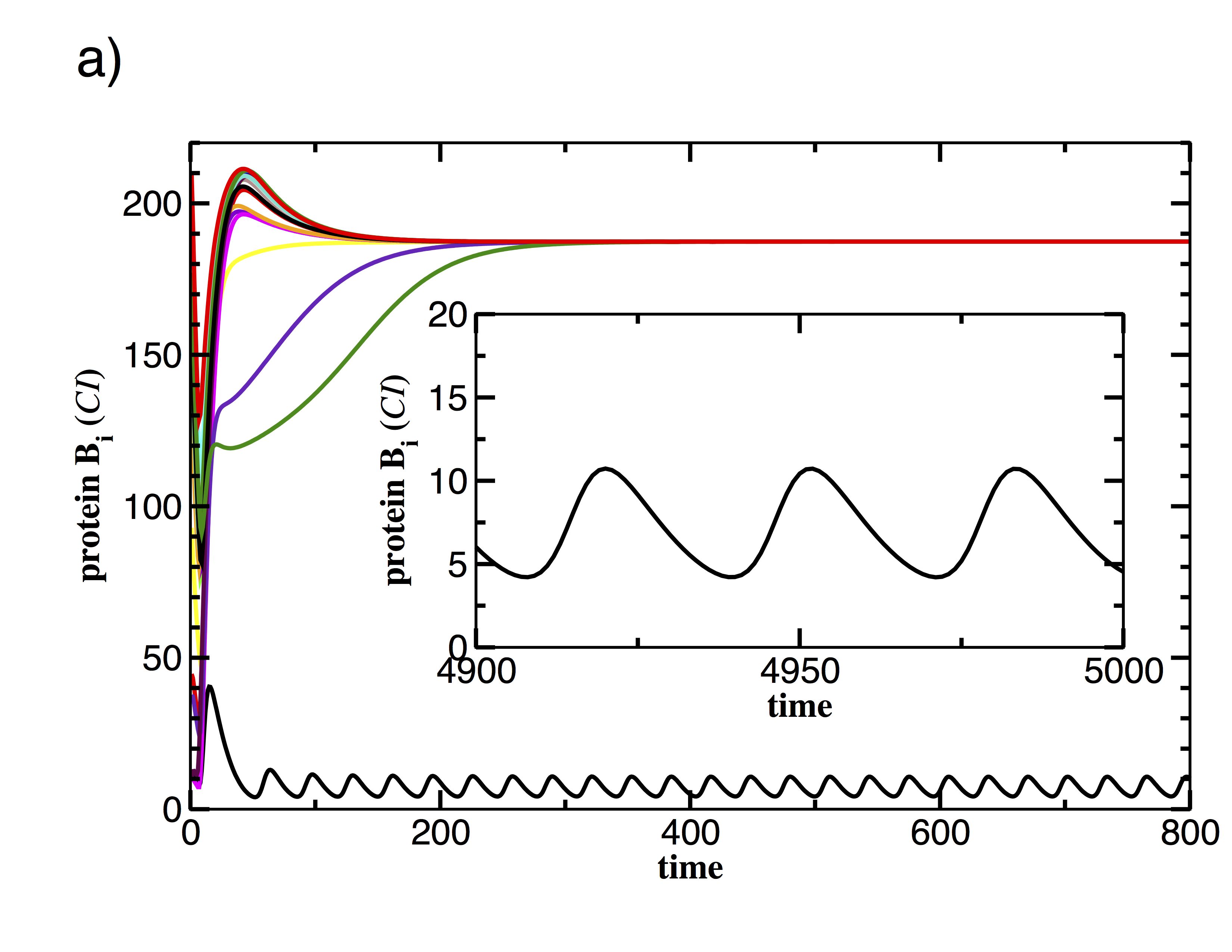

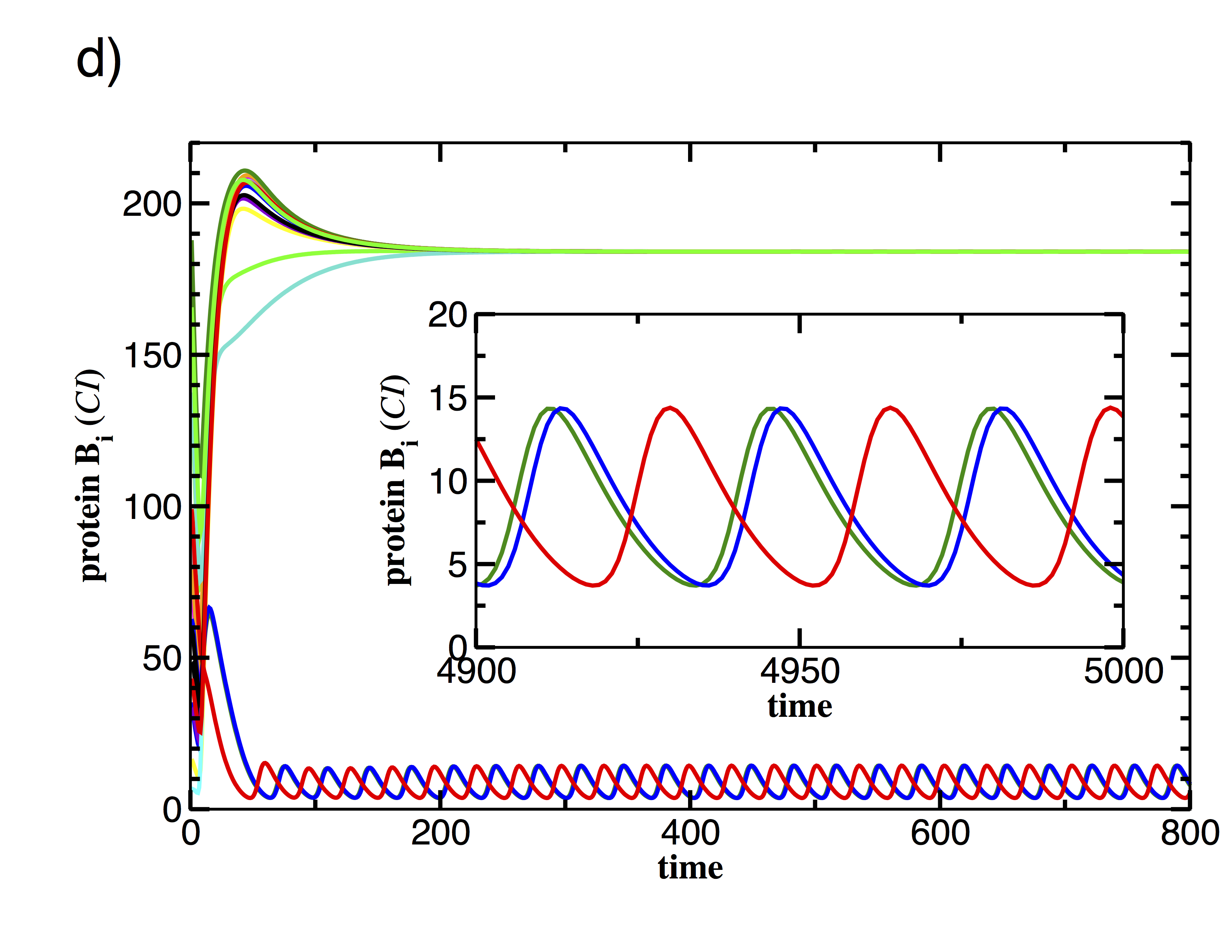

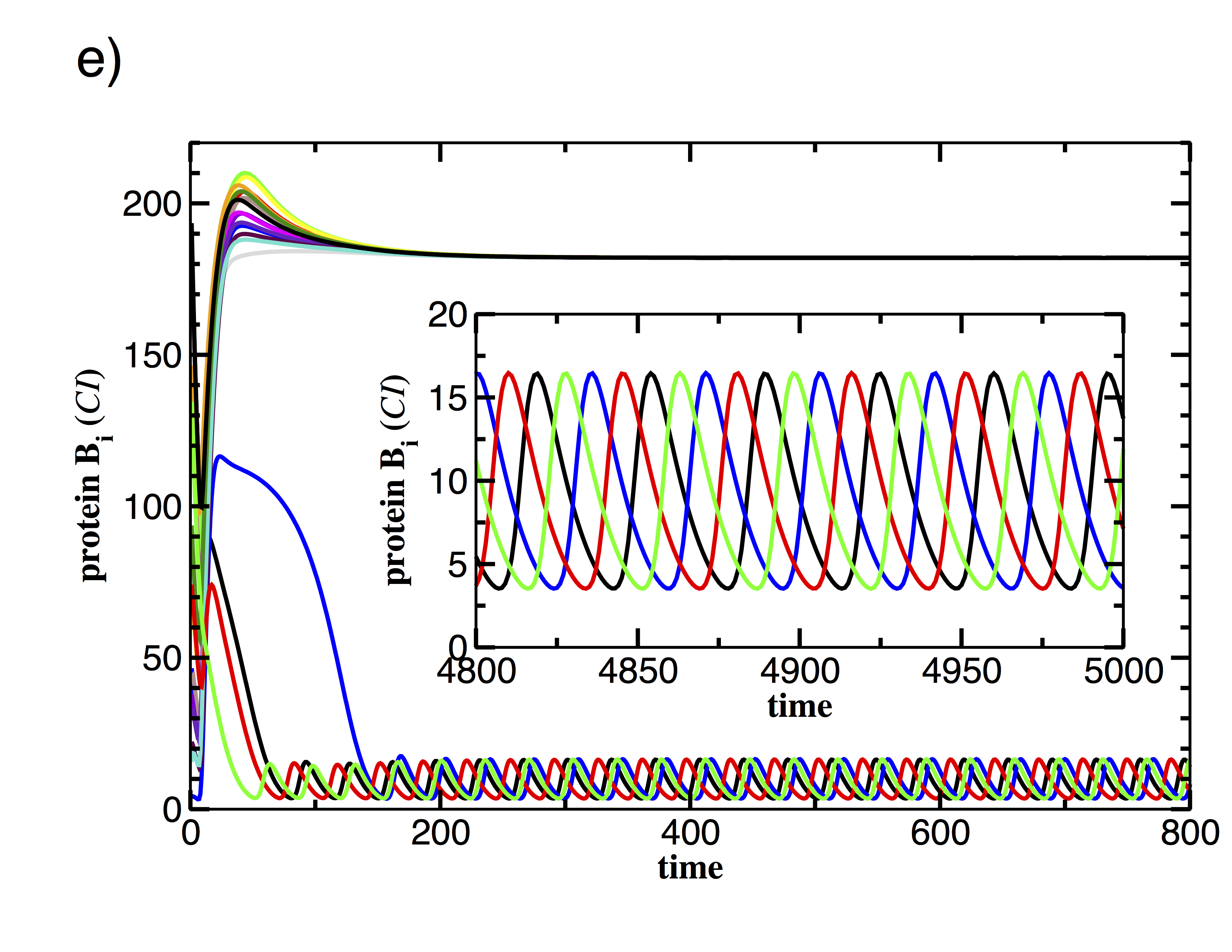

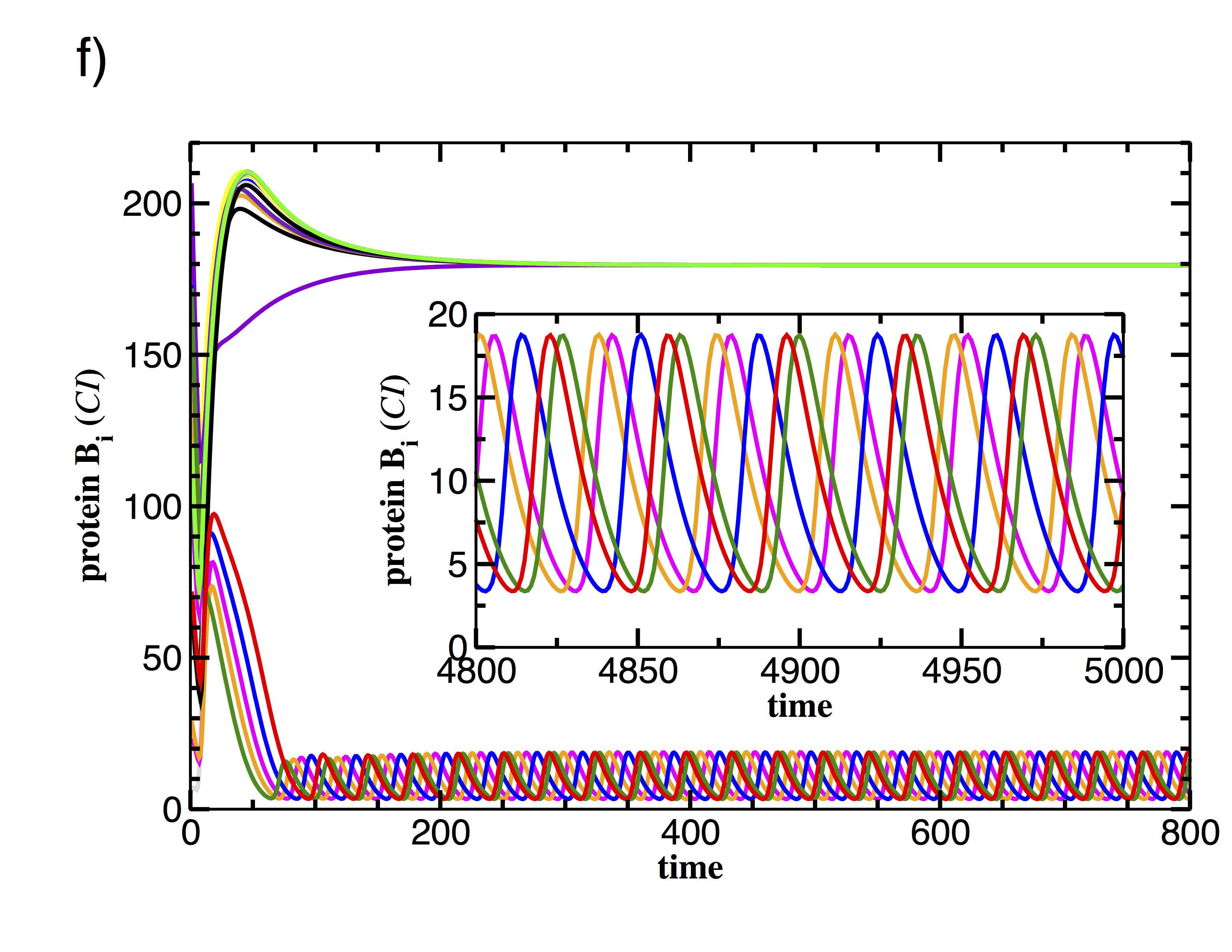

Figure 7. Examples of time-series showing CI protein concentrations for N=18 repressilators for the same conditions, i.e. identical parameter sets. The realizations differ only by the initial conditions leading to different cluster formations.

Emergence of collective dynamics. Complex systems represented by a network of simple interacting elements can demonstrate dynamical and multistable regimes with properties not possessed by single elements. The mammalian brain, as a network of networks, may well demonstrate many of these unexpected regimes, and it would be impossible to understand its function without the methodology recently developed in nonlinear dynamics. Let us illustrate this point with a system of repressilators with cell-to-cell communication as a prototype for complex behaviour emerging only due to interaction. We have chosen a simple genetic oscillator, the repressilator, for this purpose, because it includes only three genes, which are able to function independently from the rest of the cellular machinery, and hence demonstrates relatively simple dynamics as a single element. The motif is built from three genes, where the protein product of each gene represses the expression of another in a cyclic manner (Elowitz & Leibler, 2000). This motif is widely found in natural genetic networks such as the genetic clock (Purcell et al., 2010; Hogenesch & Ueda, 2011; Pokhilko et al., 2012). Cell-to-cell communication was introduced to the repressilator to reduce the noisy protein expression and build a reliable synthetic genetic clock (Garcia-Ojalvo et al., 2004). The additional quorum-sensing feedback loop is based on the Lux quorum-sensing mechanism and can be connected to the basic repressilator in such a way that it either reinforces the oscillations of the repressilator or competes with the overall negative feedback of the repressilator. The former leads to phase-attractive coupling and robust synchronized oscillations (Garcia-Ojalvo et al., 2004), whereas the latter evokes a phase-repulsive influence (Volkov & Stolyarov, 1991).
Phase-repulsive coupling is the key to multi-stability and rich dynamics, including chaotic oscillations (Ullner et al., 2007; Ullner et al., 2008; Koseska et al., 2010), in the repressilator network. A single rewiring of the connection between the basic repressilator and the additional quorum-sensing feedback loop is sufficient to alter the entire dynamics of the cellular population. As a consequence, the in-phase regime becomes unstable and phase-repulsive dynamics dominates. This phenomenon is common in several biological systems, including morphogenesis in Hydra regeneration and animal coat pattern formation (Meinhardt, 1982; Meinhardt, 1985), the jamming avoidance response in electric fish (Glass & Mackey, 1988), the brain of songbirds (Laje & Mindlin, 2002), neural activity in the respiratory system (Koseska et al., 2007), and signal processing in neuronal systems (Tessone et al., 2006).
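
To make the basic building block concrete, the following sketch integrates a single, uncoupled repressilator in the dimensionless form introduced by Elowitz & Leibler (2000), with three mRNA and three protein variables. The quorum-sensing coupling discussed above is deliberately omitted. The parameter values, the initial condition and the simple Runge-Kutta integrator are illustrative assumptions and are not the settings used for Figs. 7 and 8.

```python
def repressilator_rhs(state, alpha=216.0, alpha0=0.216, beta=1.0, n=2.0):
    """Right-hand side for mRNAs (m1..m3) and proteins (p1..p3) of one repressilator."""
    m1, m2, m3, p1, p2, p3 = state
    return [
        -m1 + alpha / (1.0 + p3 ** n) + alpha0,  # gene 1 is repressed by protein 3
        -m2 + alpha / (1.0 + p1 ** n) + alpha0,  # gene 2 is repressed by protein 1
        -m3 + alpha / (1.0 + p2 ** n) + alpha0,  # gene 3 is repressed by protein 2
        -beta * (p1 - m1),
        -beta * (p2 - m2),
        -beta * (p3 - m3),
    ]


def rk4_step(f, state, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = f(state)
    k2 = f([s + 0.5 * dt * k for s, k in zip(state, k1)])
    k3 = f([s + 0.5 * dt * k for s, k in zip(state, k2)])
    k4 = f([s + dt * k for s, k in zip(state, k3)])
    return [s + dt * (a + 2 * b + 2 * c + d) / 6.0
            for s, a, b, c, d in zip(state, k1, k2, k3, k4)]


def simulate_repressilator(t_end=200.0, dt=0.01):
    """Return a list of (time, p1) pairs starting from an asymmetric initial state."""
    state = [0.0, 0.0, 0.0, 1.0, 2.0, 3.0]
    trace = []
    for step in range(int(t_end / dt)):
        trace.append((step * dt, state[3]))
        state = rk4_step(repressilator_rhs, state, dt)
    return trace


trace = simulate_repressilator()
late = [p for t, p in trace if t >= 100.0]  # discard the initial transient
swing = max(late) - min(late)
print(f"peak-to-trough swing of protein 1: {swing:.1f}")
```

For these assumed parameters the symmetric steady state is unstable and the protein concentration keeps oscillating. A coupled population would add an autoinducer variable per cell together with a shared extracellular pool, which is where the phase-attractive or phase-repulsive wiring enters.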

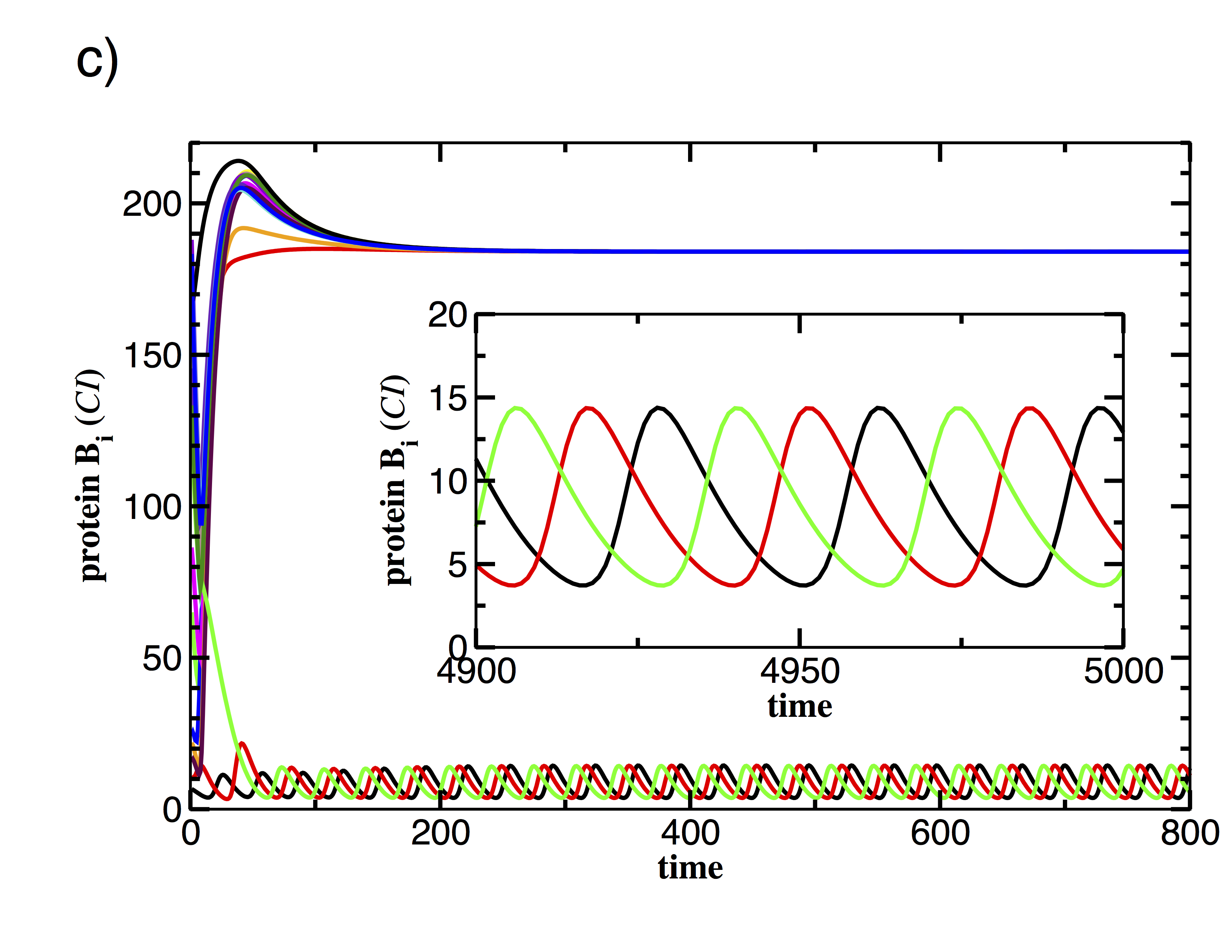

In what follows we discuss only multi-stability and chaotic dynamics, as examples of the complex dynamics evoked by a simple isogenetic synthetic genetic network communicating via quorum sensing. Genetic heterogeneity, noise and more sophisticated coupling schemes, including, e.g., network-of-networks architecture, hierarchies or time delay, would be additional significant sources of complexity. For a detailed description we refer to the review (Borg et al., 2014). Even two coupled repressilators exhibit rich, multi-stable behaviour with five different dynamical regimes: self-sustained oscillatory solutions, inhomogeneous limit cycles (IHLC), inhomogeneous steady states (IHSS), homogeneous steady states (HSS) and chaotic oscillations, all of which exist for biologically realistic parameter ranges. Example time series can be found in Figure 3 in (Borg et al., 2014). Both inhomogeneous solutions (IHLC and IHSS) are interesting in that they show different behaviour of isogenetic cells in the same environment. One cell maintains a high level of a particular protein (CI) whereas the other cell keeps a low concentration of that protein, which in turn implies a high concentration of another protein (LacI). Both cells are able to specialize as a LacI or CI producer, and only the history (the initial condition in the numerical experiments) determines the state. Both inhomogeneous states are combined states and differ from a bistable system in that each protein level cannot be occupied independently. Increasing the system size widens the range of possible dynamical regimes and enhances the flexibility of the cell colony. The realizations shown in Fig. 7 illustrate the rich range of possible stable solutions.

All 18 repressilators are identical and are under the same environmental conditions. Only the different initial conditions determine the particular behaviour expressed by the individual cell. Note that different distributions between the high and low states affect the averaged protein levels, the frequency and the phase relation. Furthermore, the probability of switching from a homogeneous solution to inhomogeneous solutions (IHLC or IHSS) increases in a growing colony of repressilators, so a large cell colony is more likely than a small one to be found in a differentiated state. These findings support the idea of using the repressilator with repressive cell–cell communication as a prototype of artificial cell differentiation in synthetic biology. The cell differentiation is also closely related to the community effect in development (Saka et al., 2011).

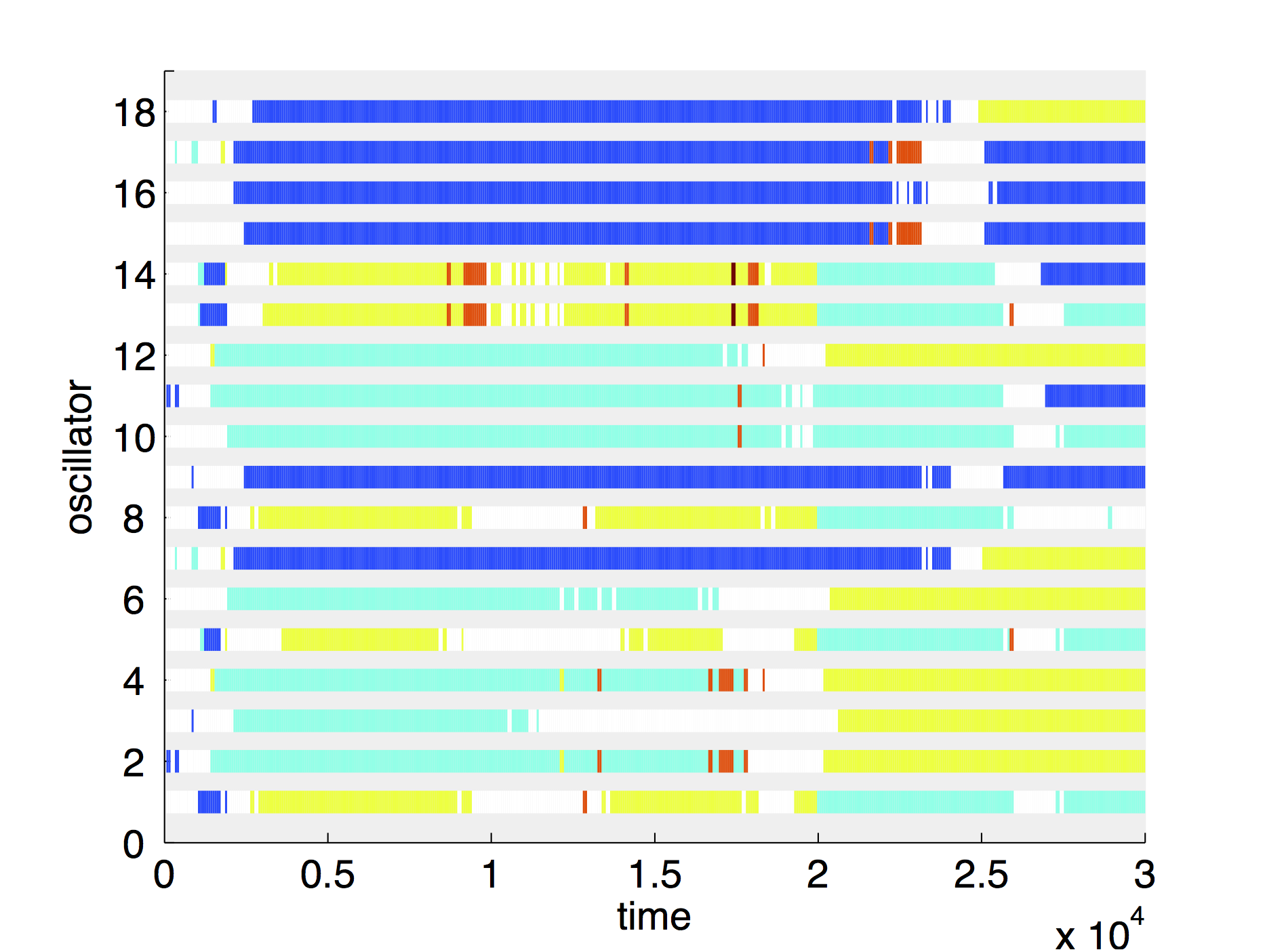

Figure 8 shows weak chaotic dynamics of N = 18 oscillators, with long-lived constellations of three and four groups. To visualise the grouping we introduce a colour coding that marks oscillators with very similar behaviour over some time with the same colour in the cluster plot. Figure 8 (d) illustrates in a cluster plot the interplay of long-time grouping and recurring transients with less ordered states during which a rearrangement to a new grouping happens. The groupings last up to 20,000 time units, i.e., about 4000 cycles. Once the oscillators are distributed in a long-living grouping state, they oscillate synchronously within the group and cannot be distinguished by their time series until the next decomposition occurs and spreads the phases.

The chaotic dynamics observed here and the effect of intrinsic noise in synthetic oscillators (Elowitz & Leibler, 2000; Stricker et al., 2008) have very similar manifestations despite their different origins; we thus demonstrate that chaos is an alternative source of uncertainty in genetic networks. The chaotic dynamics and the grouping phenomena appear gradually with increasing coupling, i.e., at increasing cell densities, which can be a cause of stress. One could speculate that the population has the flexibility to respond to and survive environmental stress by distributing its cells within stable clusters. The gradual onset of chaotic behaviour enables the population to adapt the mixing velocity and the degree of diversity to the stress conditions.

Complex and counterintuitive dynamics as a result of nonlinearity. Recent advances in nonlinear dynamics have provided us with surprising and unexpected complex behaviour as a result of nonlinearity possessed by the dynamical systems. One should certainly note here such effects as appearance of deterministic chaos (Lorenz, 1963; Sklar, 1997) and a huge variety of different manifestations of synchronization (Rosenblum et al., 1996; Pikovsky et al., 2001). Here, however, as more relevant to neural genetic dynamics we will discuss phenomena of noise-induced ordering and delayed bifurcations.

Figure 8. Chaotic behaviour seen in (a), (b), (c) time series of the same system of repressilators taken at different time-points and (d) the corresponding cluster plot over the entire time span in the self-oscillatory regime of N = 18 oscillators with weak chaotic behaviour and long lasting grouping. The four figures have a matching colour coding.

It has been demonstrated that gene expression is a genuinely noisy process (McAdams & Arkin, 1999). The stochasticity of gene expression, both intrinsic and extrinsic, has been experimentally measured, e.g., in (Elowitz et al., 2002), and modelled either with stochastic Langevin-type differential equations or with Gillespie-type algorithms that simulate the single chemical reactions underlying this stochasticity (Gillespie et al., 2013). Naturally, the question arises as to what the fundamental role of noise in intracellular intelligence is. Can stochastic fluctuations only corrupt the information processing in the course of learning, or can they also help cells to “think”? Indeed, it has been shown that noise can counterintuitively lead to ordering in nonlinear systems under certain conditions, e.g., in the effect of stochastic resonance (SR) (Gammaitoni et al., 1998), which has found many manifestations in biological systems: it improves the hunting abilities of the paddlefish (Russell et al., 1999), enhances human balance control (Priplata et al., 2003), helps the brain’s visual processing (Mori & Kai, 2002), and increases the speed of memory retrieval (Usher & Feingold, 2000).
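
As a minimal sketch of a Gillespie-type simulation, consider the simplest possible model of noisy expression: a protein produced at a constant rate and degraded at a rate proportional to its copy number. This birth-death model, its rate constants and the function names are assumptions chosen for illustration and are not taken from the cited studies.

```python
import random


def gillespie_birth_death(k_prod=10.0, k_deg=1.0, t_end=2000.0, seed=0):
    """Exact stochastic simulation of production (rate k_prod) and decay (rate k_deg * n)."""
    rng = random.Random(seed)
    t, n = 0.0, 0
    times, counts = [t], [n]
    while t < t_end:
        a_prod = k_prod            # propensity of the production reaction
        a_deg = k_deg * n          # propensity of the degradation reaction
        a_total = a_prod + a_deg
        t += rng.expovariate(a_total)          # waiting time to the next reaction
        if rng.random() * a_total < a_prod:    # choose which reaction fires
            n += 1
        else:
            n -= 1
        times.append(t)
        counts.append(n)
    return times, counts


def time_average(times, counts):
    """Average copy number, weighting each state by the time spent in it."""
    total = sum(c * (t1 - t0) for c, t0, t1 in zip(counts, times, times[1:]))
    return total / (times[-1] - times[0])


times, counts = gillespie_birth_death()
mean_copies = time_average(times, counts)
print(f"time-averaged copy number: {mean_copies:.2f} (deterministic value: 10.00)")
```

The copy number fluctuates around the deterministic steady state k_prod / k_deg. In a Langevin-type description the same fluctuations would instead be represented by a noise term added to the rate equation.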

Our investigations into the role of noise in the functioning of the associative perceptron have shown that noise significantly improves two different measures of functionality (Bates et al., 2014). First, we saw a marked improvement in the likelihood of eliciting a response from an input outside the memory range of the noise-free system. Second, we noticed an increase in effectiveness when considering the ability to respond repeatedly to inputs. In both cases there was a stochastic resonance bell curve demonstrating an optimal level of noise for the task. The same principles appeared to work for summating perceptrons. In (Bates et al., 2015) we studied the role of genetic noise in intelligent decision making at the genetic level and showed that noise can play a constructive role, helping cells to make the right decision. To do this, we outlined a design by which linear separation can be performed genetically on the concentrations of certain input proteins. Considering this system in the presence of noise, we observed and quantified the effect of stochastic resonance in linear classification systems with input noise and threshold spoiling.
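
The bell-shaped dependence on noise can be illustrated with a generic textbook example of stochastic resonance, a threshold detector driven by a subthreshold periodic signal. The sketch below is not the genetic perceptron model of (Bates et al., 2014, 2015). The signal amplitude, threshold, noise levels and the use of the signal-output correlation as a performance measure are all assumptions made for illustration.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def detector_correlation(noise_sd, n_samples=20000, amplitude=0.5,
                         threshold=1.0, period=100, seed=0):
    """Correlation between a subthreshold sine and the output of a noisy threshold unit."""
    rng = random.Random(seed)
    signal = [amplitude * math.sin(2 * math.pi * i / period) for i in range(n_samples)]
    output = [1.0 if s + rng.gauss(0.0, noise_sd) > threshold else 0.0 for s in signal]
    return pearson(signal, output)


low = detector_correlation(0.05)   # too little noise: the threshold is never reached
mid = detector_correlation(0.5)    # intermediate noise: crossings follow the signal
high = detector_correlation(20.0)  # too much noise: crossings are nearly random
print(f"correlation at low / intermediate / high noise: {low:.3f} / {mid:.3f} / {high:.3f}")
```

The signal alone never crosses the threshold, so some noise is needed for any response at all, while very strong noise swamps the signal. The correlation is therefore largest at an intermediate noise level.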

Another surprising effect was reported by us in (Nene et al., 2012) and discussed in (Ashwin & Zaikin, 2015). It is particularly relevant to the genetic dynamics inside the neuron, because the ability of a neuron to classify inputs can be linked to the function of a genetic switch. When external signals are sufficiently symmetric, the circuit may exhibit bistability, which is associated with two distinct cell fates chosen with equal probability because of the noise involved in gene expression. If, however, the input signals provide a transient asymmetry, the switch will be biased by the rate of the external signals. An effect of speed-dependent cellular decision-making can then be observed (Ashwin et al., 2012; Ashwin & Zaikin, 2015), in which slow and fast decisions result in different probabilities of choosing the corresponding cell fate. The speed at which the system crosses a critical region strongly influences its sensitivity to the transient asymmetry of the external signals. For fast changes, the system may not notice a transient asymmetry, but for slow changes, bifurcation delay may increase the probability of one of the states being selected (Ashwin et al., 2012). In (Palau-Ortin et al., 2015) these effects have also been extended to pattern formation.

How these different dynamical regimes are responsible for particular brain functions is an open question (Rabinovich et al., 2006). It is also not clear how these dynamical regimes are linked to the dynamics of information flow in the brain (Rabinovich et al., 2012). It is important to note that in recent works on attractor networks, synaptic dynamics (synaptic depression) was seen to convert attractor dynamics in a neural network into heteroclinic dynamics, with solutions passing from the vicinity of one "attractor ghost" to another and showing various time scales (Rabinovich et al., 2014). This has been suggested as a mechanism for a number of functional effects, from transient memories to long-term integration to slow-fast pseudo-periodic oscillations. It is also worth mentioning that the recently discovered chimera-type dynamics may be found in the brain and be responsible for certain functionality (Panaggio & Abrams, 2015). It is an intriguing question what role glia might play in controlling the potential passage of network dynamics between different dynamical regimes: we may speculate that this happens because glia control transient changes of network topology.

In this review we have emphasized that the mammalian brain works as a network of interconnected subnetworks. We have further discussed that neurons interact with glial cells in a network-like manner, potentially giving rise to some of the complicated behaviour of higher mammals. One piece of supporting neurobiological evidence for our claim comes from the recent discovery that the myelin sheath, which provides faster signal transmission between neurons and is formed by oligodendrocytes, a type of glial cell, is not uniform across brain structures: much less myelin is found in the higher levels of the cerebral cortex, which is the most recently evolved part (Fields, 2014). The researcher suggested that, as neuronal diversity increases in the more evolved structures of the cerebral cortex, the neurons utilize the myelin to achieve the most efficient and complex behaviour, which is only present in higher-level mammals. Thus, our speculations are consistent with some of the recent findings, suggesting that glial cells and neurons are conducting a complex conversation to achieve the most efficient function. Furthermore, we suggest here that an intracellular network exists on a genetic level inside each neuron, and that its function is based on the principle of artificial intelligence. Hence, we propose that the mammalian brain is, in fact, a network of networks, and suggest that future research should aim to incorporate the genetic, neuronal and glial networks to achieve a more comprehensive understanding of how the brain performs its complex operations to give rise to the most enigmatic thing of all: the human mind.

AZ, SG and AL acknowledge support from the Russian Science Foundation (16-12-00077). The authors thank T. Kuznetsova for Fig. 6.

AMIRI M., BAHRAMI F. & JANAHMADI M. (2011). Functional modeling of astrocytes in epilepsy: a feedback system perspective. Neural Comput Appl 20, 1131-1139.

ARAQUE A., PARPURA V., SANZGIRI R.P. & HAYDON P.G. (1999). Tripartite synapses: glia, the unacknowledged partner. Trends in Neurosciences 22, 208-215.

ASHWIN P., WIECZOREK S., VITOLO R. & COX P. (2012). Tipping points in open systems: bifurcation, noise-induced and rate-dependent examples in the climate system. Philos Trans A Math Phys Eng Sci 370, 1166-1184.

ASHWIN P. & ZAIKIN A. (2015). Pattern selection: the importance of «how you get there». Biophysical journal 108, 1307-1308.

BALDUZZI D. & TONONI G. (2008). Integrated information in discrete dynamical systems: Motivation and theoretical framework. PLoS computational biology 4.

BALDUZZI D. & TONONI G. (2009). Qualia: The Geometry of Integrated Information. PLoS computational biology 5.

BARRETT A.B. & SETH A.K. (2011). Practical Measures of Integrated Information for Time-Series Data. PLoS computational biology 7.

BATES R., BLYUSS O., ALSAEDI A. & ZAIKIN A. (2015). Effect of noise in intelligent cellular decision making. PloS one 10, e0125079.

BATES R., BLYUSS O. & ZAIKIN A. (2014). Stochastic resonance in an intracellular genetic perceptron. Physical review E, Statistical, nonlinear, and soft matter physics 89, 032716.

BEGGS J.M. & PLENZ D. (2003). Neuronal avalanches in neocortical circuits. The Journal of neuroscience : the official journal of the Society for Neuroscience 23, 11167-11177.

BENNETT M.R., FARNELL L. & GIBSON W.G. (2005). A quantitative model of purinergic junctional transmission of calcium waves in astrocyte networks. Biophysical journal 89, 2235-2250.

BENNETT M.V., CONTRERAS J.E., BUKAUSKAS F.F. & SAEZ J.C. (2003). New roles for astrocytes: gap junction hemichannels have something to communicate. Trends Neurosci 26, 610-617.

BLISS T.V. & LOMO T. (1973). Long-lasting potentiation of synaptic transmission in the dentate area of the anaesthetized rabbit following stimulation of the perforant path. The Journal of physiology 232, 331-356.

BORG Y., ULLNER E., ALAGHA A., ALSAEDI A., NESBETH D. & ZAIKIN A. (2014). Complex and unexpected dynamics in simple genetic regulatory networks. Int J Mod Phys B 28.

BRAY D. (1995). Protein molecules as computational elements in living cells. Nature 376, 307-312.

BRAY D. & LAY S. (1994). Computer simulated evolution of a network of cell-signaling molecules. Biophysical journal 66, 972-977.

CORNELL-BELL A.H., FINKBEINER S.M., COOPER M.S. & SMITH S.J. (1990). Glutamate induces calcium waves in cultured astrocytes: long-range glial signaling. Science 247, 470-473.

DE ALMEIDA L., IDIART M. & LISMAN J.E. (2009). The Input-Output Transformation of the Hippocampal Granule Cells: From Grid Cells to Place Fields. Journal of Neuroscience 29, 7504-7512.

DE PITTA M., VOLMAN V., BERRY H. & BEN-JACOB E. (2011). A Tale of Two Stories: Astrocyte Regulation of Synaptic Depression and Facilitation. PLoS computational biology 7.

ELOWITZ M.B. & LEIBLER S. (2000). A synthetic oscillatory network of transcriptional regulators. Nature 403, 335-338.

ELOWITZ M.B., LEVINE A.J., SIGGIA E.D. & SWAIN P.S. (2002). Stochastic gene expression in a single cell. Science 297, 1183-1186.

FELLIN T., PASCUAL O. & HAYDON P.G. (2006). Astrocytes coordinate synaptic networks: Balanced excitation and inhibition. Physiology 21, 208-215.

FERNANDO C.T., LIEKENS A.M., BINGLE L.E., BECK C., LENSER T., STEKEL D.J. & ROWE J.E. (2009). Molecular circuits for associative learning in single-celled organisms. Journal of the Royal Society, Interface / the Royal Society 6, 463-469.

FIELDS R.D. (2014). Myelin-More than Insulation. Science 344, 264-266.

FYHN M., HAFTING T., TREVES A., MOSER M.B. & MOSER E.I. (2007). Hippocampal remapping and grid realignment in entorhinal cortex. Nature 446, 190-194.

GAMMAITONI L., HANGGI P., JUNG P. & MARCHESONI F. (1998). Stochastic resonance. Reviews of Modern Physics 70, 223-287.

GANDHI N., ASHKENASY G. & TANNENBAUM E. (2007). Associative learning in biochemical networks. Journal of theoretical biology 249, 58-66.

GARCIA-OJALVO J., ELOWITZ M.B. & STROGATZ S.H. (2004). Modeling a synthetic multicellular clock: repressilators coupled by quorum sensing. Proceedings of the National Academy of Sciences of the United States of America 101, 10955-10960.

GIAUME C., KOULAKOFF A., ROUX L., HOLCMAN D. & ROUACH N. (2010). NEURON-GLIA INTERACTIONS Astroglial networks: a step further in neuroglial and gliovascular interactions. Nature Reviews Neuroscience 11, 87-99.

GILLESPIE D.T., HELLANDER A. & PETZOLD L.R. (2013). Perspective: Stochastic algorithms for chemical kinetics. The Journal of chemical physics 138, 170901.

GLASS L. & MACKEY M.C. (1988). From clocks to chaos: the rhythms of life. Princeton University Press, Princeton.

GORDLEEVA S.Y., STASENKO S.V., SEMYANOV A.V., DITYATEV A.E. & KAZANTSEV V.B. (2012). Bi-directional astrocytic regulation of neuronal activity within a network. Front Comput Neurosci 6, 92.

GRANGER R. (2011). How brains are built: Principles of computational neuroscience. Cerebrum.

GROSCHE J., MATYASH V., MOLLER T., VERKHRATSKY A., REICHENBACH A. & KETTENMANN H. (1999). Microdomains for neuron-glia interaction: parallel fiber signaling to Bergmann glial cells. Nature neuroscience 2, 139-143.

HAFTING T., FYHN M., MOLDEN S., MOSER M.B. & MOSER E.I. (2005). Microstructure of a spatial map in the entorhinal cortex. Nature 436, 801-806.

HAYMAN R.M., CHAKRABORTY S., ANDERSON M.I. & JEFFERY K.J. (2003). Context-specific acquisition of location discrimination by hippocampal place cells. Eur J Neurosci 18, 2825-2834.

HAYMAN R.M. & JEFFERY K.J. (2008). How Heterogeneous Place Cell Responding Arises From Homogeneous Grids-A Contextual Gating Hypothesis. Hippocampus 18, 1301-1313.

HEBB D.O. (1949). The organization of behavior: a neuropsychological theory. Wiley, New York.

HJELMFELT A., WEINBERGER E.D. & ROSS J. (1991). Chemical implementation of neural networks and Turing machines. Proceedings of the National Academy of Sciences of the United States of America 88, 10983-10987.

HOGENESCH J.B. & UEDA H.R. (2011). Understanding systems-level properties: timely stories from the study of clocks. Nature reviews Genetics 12, 407-416.

JEFFERY K.J. (2011). Place Cells, Grid Cells, Attractors, and Remapping. Neural Plast.

JELLINGER K.A. (2008). Neuropathological aspects of Alzheimer disease, Parkinson disease and frontotemporal dementia. Neurodegener Dis 5, 118-121.

JONES B., STEKEL D.J., ROWE J.E. & FERNANDO C.T. (2007). Is there a Liquid State Machine in the Bacterium Escherichia Coli? In: Proceedings of IEEE Symposium on Artificial Life, 187-191.

KANDEL E.R., SCHWARTZ J.H. & JESSELL T.M. (2000). Principles of neural science. McGraw-Hill, New York, NY ; London.

KAZANTSEV V.B. (2009). Spontaneous calcium signals induced by gap junctions in a network model of astrocytes. Physical review E, Statistical, nonlinear, and soft matter physics 79, 010901.

KNIGHT R.A. & VERKHRATSKY A. (2010). Neurodegenerative diseases: failures in brain connectivity? Cell Death Differ 17, 1069-1070.

KOSESKA A., ULLNER E., VOLKOV E., KURTHS J. & GARCIA-OJALVO J. (2010). Cooperative differentiation through clustering in multicellular populations. Journal of theoretical biology 263, 189-202.

KOSESKA A., VOLKOV E., ZAIKIN A. & KURTHS J. (2007). Inherent multistability in arrays of autoinducer coupled genetic oscillators. Phys Rev E 75.

LAJE R. & MINDLIN G.B. (2002). Diversity within a birdsong. Physical review letters 89.

LEUTGEB J.K., LEUTGEB S., TREVES A., MEYER R., BARNES C.A., MCNAUGHTON B.L., MOSER M.B. & MOSER E.I. (2005). Progressive transformation of hippocampal neuronal representations in «morphed» environments. Neuron 48, 345-358.

LORENZ E.N. (1963). Deterministic Nonperiodic Flow. J Atmos Sci 20, 130-141.

MALENKA R.C. & BEAR M.F. (2004). LTP and LTD: An embarrassment of riches. Neuron 44, 5-21.

MAZZANTI M., SUL J.Y. & HAYDON P.G. (2001). Glutamate on demand: Astrocytes as a ready source. The Neuroscientist: a review journal bringing neurobiology, neurology and psychiatry 7, 396-405.

MCADAMS H.H. & ARKIN A. (1999). It’s a noisy business! Genetic regulation at the nanomolar scale. Trends Genet 15, 65-69.

MCCULLOCH W.S. & PITTS W. (1990). A Logical Calculus of the Ideas Immanent in Nervous Activity (Reprinted from Bulletin of Mathematical Biophysics, Vol 5, Pg 115-133, 1943). B Math Biol 52, 99-115.

MEINHARDT H. (1982). Models of biological pattern formation. Academic Press, London ; New York.

MEINHARDT H. (1985). Models of Biological Pattern-Formation in the Development of Higher Organisms. Biophysical journal 47, A355-A355.

MIMMS C. (2010). Why Synthesized Speech Sounds So Awful. http://wwwtechnologyreviewcom/view/420354/why-synthesized-speech-sounds-....

MORI T. & KAI S. (2002). Noise-induced entrainment and stochastic resonance in human brain waves. Physical review letters 88, 218101.

NADKARNI S. & JUNG P. (2003a). Spontaneous oscillations of dressed neurons: A new mechanism for epilepsy? Physical review letters 91.

NADKARNI S. & JUNG P. (2003b). Spontaneous oscillations of dressed neurons: a new mechanism for epilepsy? Physical review letters 91, 268101.

NADKARNI S. & JUNG P. (2007). Modeling synaptic transmission of the tripartite synapse. Physical biology 4, 1-9.

NENE N.R., GARCIA-OJALVO J. & ZAIKIN A. (2012). Speed-dependent cellular decision making in nonequilibrium genetic circuits. PloS one 7, e32779.

PALAU-ORTIN D., FORMOSA-JORDAN P., SANCHO J.M. & IBANES M. (2015). Pattern selection by dynamical biochemical signals. Biophysical journal 108, 1555-1565.

PALOP J.J. & MUCKE L. (2010). Amyloid-beta-induced neuronal dysfunction in Alzheimer’s disease: from synapses toward neural networks. Nature neuroscience 13, 812-818.

PANAGGIO M.J. & ABRAMS D.M. (2015). Chimera states: coexistence of coherence and incoherence in networks of coupled oscillators. Nonlinearity 28, R67-R87.

PARPURA V. & HAYDON P.G. (2000). Physiological astrocytic calcium levels stimulate glutamate release to modulate adjacent neurons. Proceedings of the National Academy of Sciences of the United States of America 97, 8629-8634.

PARPURA V. & ZOREC R. (2010). Gliotransmission: Exocytotic release from astrocytes. Brain Res Rev 63, 83-92.

PARRI H.R., GOULD T.M. & CRUNELLI V. (2001). Spontaneous astrocytic Ca2+ oscillations in situ drive NMDAR-mediated neuronal excitation. Nature neuroscience 4, 803-812.

PHATNANI H. & MANIATIS T. (2015). Astrocytes in neurodegenerative disease. Cold Spring Harbor perspectives in biology 7.

PIKOVSKY A., ROSENBLUM M. & KURTHS J. (2001). Synchronization: a universal concept in nonlinear sciences. Cambridge University Press, Cambridge.

POKHILKO A., FERNANDEZ A.P., EDWARDS K.D., SOUTHERN M.M., HALLIDAY K.J. & MILLAR A.J. (2012). The clock gene circuit in Arabidopsis includes a repressilator with additional feedback loops. Molecular systems biology 8, 574.

POSTNOV D.E., RYAZANOVA L.S. & SOSNOVTSEVA O.V. (2007). Functional modeling of neural-glial interaction. Bio Systems 89, 84-91.

PRIPLATA A.A., NIEMI J.B., HARRY J.D., LIPSITZ L.A. & COLLINS J.J. (2003). Vibrating insoles and balance control in elderly people. Lancet 362, 1123-1124.

PURCELL O., SAVERY N.J., GRIERSON C.S. & DI BERNARDO M. (2010). A comparative analysis of synthetic genetic oscillators. Journal of the Royal Society, Interface / the Royal Society 7, 1503-1524.

QIAN L., WINFREE E. & BRUCK J. (2011). Neural network computation with DNA strand displacement cascades. Nature 475, 368-372.

RABINOVICH M.I., AFRAIMOVICH V.S., BICK C. & VARONA P. (2012). Information flow dynamics in the brain. Phys Life Rev 9, 51-73.

RABINOVICH M.I., VARONA P., SELVERSTON A.I. & ABARBANEL H.D.I. (2006). Dynamical principles in neuroscience. Reviews of Modern Physics 78, 1213-1265.

RABINOVICH M.I., VARONA P., TRISTAN I. & AFRAIMOVICH V.S. (2014). Chunking dynamics: heteroclinics in mind. Front Comput Neurosc 8.

ROLLS E.T. (2007). An attractor network in the hippocampus: theory and neurophysiology. Learn Mem 14, 714-731.

ROSENBLATT F. (1958). The Perceptron - a Probabilistic Model for Information-Storage and Organization in the Brain. Psychol Rev 65, 386-408.

ROSENBLATT F. (1962). Principles of neurodynamics: perceptrons and the theory of brain mechanisms. Spartan Books, Washington.

ROSENBLUM M.G., PIKOVSKY A.S. & KURTHS J. (1996). Phase synchronization of chaotic oscillators. Physical review letters 76, 1804-1807.

RUSSELL D.F., WILKENS L.A. & MOSS F. (1999). Use of behavioural stochastic resonance by paddle fish for feeding. Nature 402, 291-294.

SAIGUSA T., TERO A., NAKAGAKI T. & KURAMOTO Y. (2008). Amoebae anticipate periodic events. Physical review letters 100, 018101.

SAKA Y., LHOUSSAINE C., KUTTLER C., ULLNER E. & THIEL M. (2011). Theoretical basis of the community effect in development. BMC systems biology 5.

SELKOE D.J. (2001). Alzheimer’s disease: genes, proteins, and therapy. Physiol Rev 81, 741-766.

SEMYANOV A. (2008). Can diffuse extrasynaptic signaling form a guiding template? Neurochem Int 52, 31-33.

SHATZ C.J. (1992). The Developing Brain. Scientific American 267, 61-67.

SKLAR L. (1997). In the wake of chaos: Unpredictable order in dynamic systems - Kellert,SH. Philos Sci 64, 184-185.